Program a "<YourUname>MMMedley" app that allows a user to load an image from the internet and scale it with a pinch gesture, play video, convert text to speech, enter text via speech, and trigger a simple custom animation.

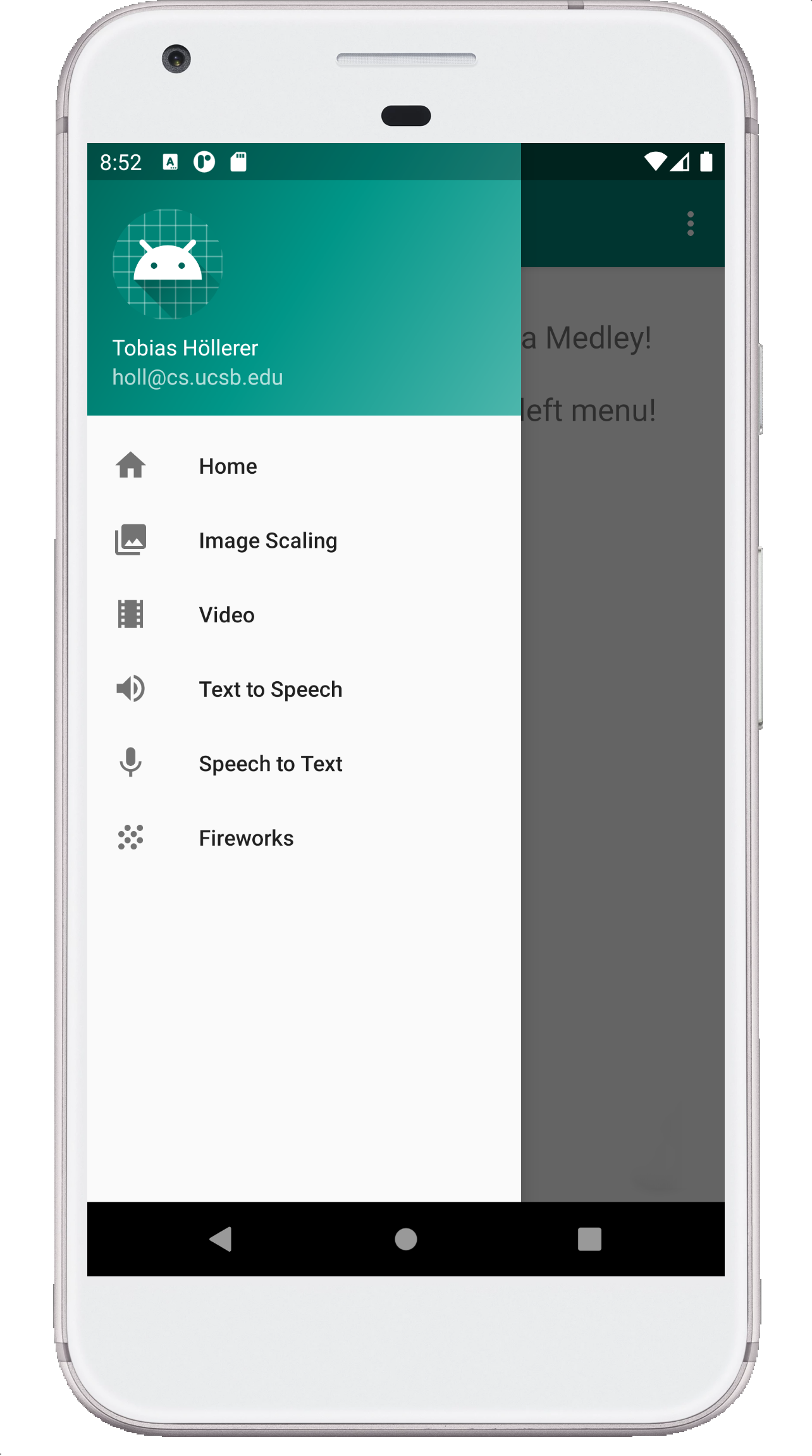

Your app should follow the structure of a "Navigation Drawer" skeleton, with five different fragments that are launched from entries in the navigation drawer. The five fragments implement the functionality listed above. They can be tested individually and need not interact with each other, other than being launched from the same framework.

We demonstrated a simple navigation drawer framework in class/discussion and introduced the Jetpack component coding paradigm. Start by creating a new project in Android Studio.

Select the Phone and Tablet form factor, use API 23 (Android Marshmallow) as your minimum API (unless you need to go lower because of the physical device you are using) and then choose the Navigation Drawer Activity template. Use the following package name: edu.ucsb.cs.cs184.<your uname>.<your uname>mmmedley

If you use the latest version of Android Studio, the template generates a code skeleton with Jetpack-compatible code for three generic fragments that you can repurpose, labeled 'home', 'gallery', and 'slideshow'. It also includes a Floating Action Button (FAB), which plays a role in some, but not all, of the fragments you will implement. Hide the FAB when it is not needed.

Your task is to repurpose every generated fragment except the home fragment, and to add new fragments, so that you end up with the five fragments described below, each demonstrating an Android multimedia feature.

Program these five fragments in adherence to the Android Jetpack philosophy, which we introduced through a demonstration in Section on Wed, Apr. 27, and in handout H4: Jetpack ViewModel Chapters.

The app should correctly handle orientation changes from portrait to landscape and back, and state should be saved in all cases. Use the Android Jetpack ViewModel to achieve this, not Bundles, SharedPreferences, or the AndroidManifest trick (declaring `android:configChanges` so that the activity is not recreated). Implement state saving in accordance with the Jetpack component architecture: the ViewModel must stay intact even when the fragment is destroyed, and the observers of every recreated fragment should retrieve the current state anew.

To facilitate grading by the TAs, add the following Log statements to your code; they are required to receive full credit on state saving:

`Log.i("<class_name>", "extra credit: <variable_name> updated")`, where `<variable_name>` is the name of the MutableLiveData.

For example: `Log.i("FireworksFragment", "extra credit: mParticles updated")`

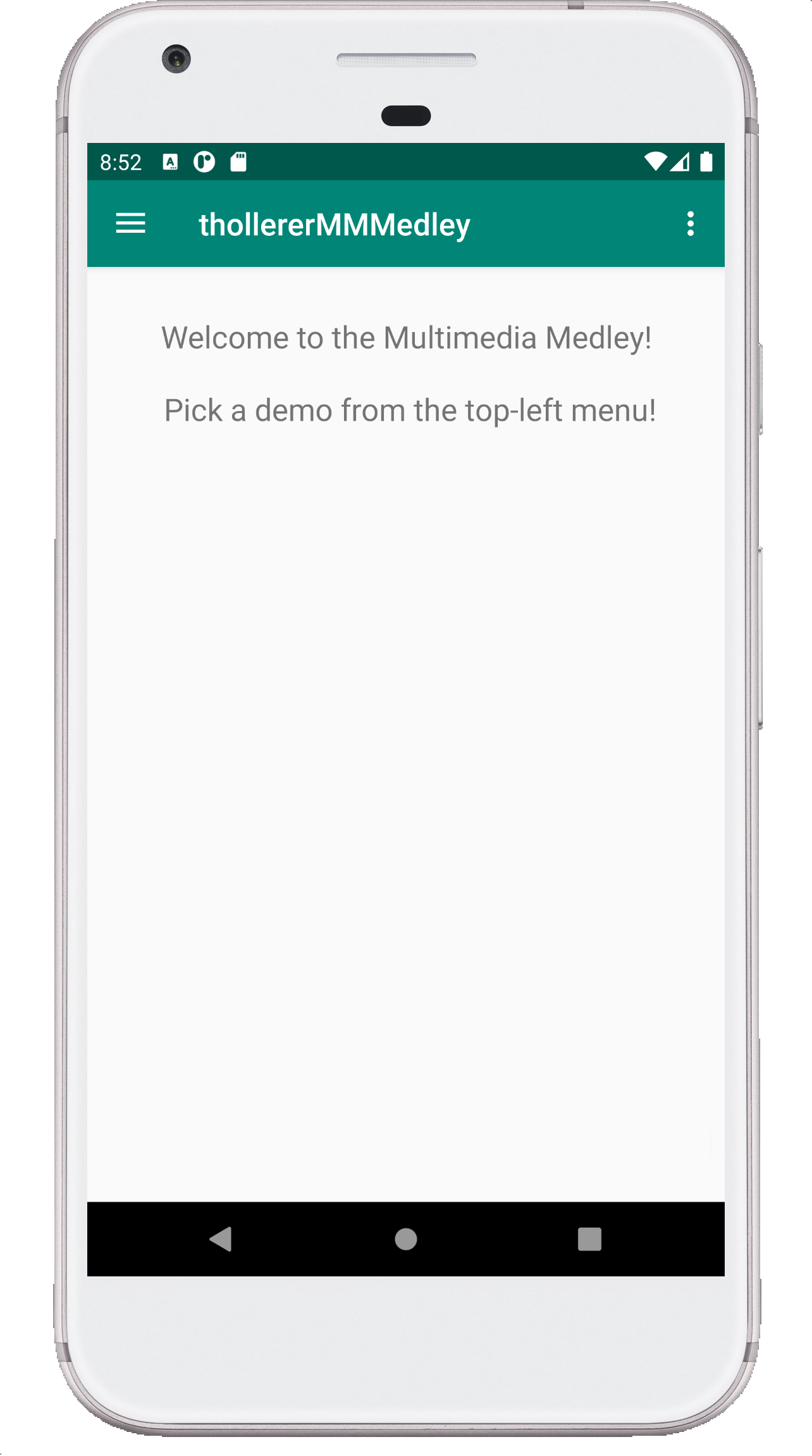

When the app is opened for the first time, it should display an empty home screen with a welcome message, and a navigation drawer with the following layout, using appropriate icons for Home, Image Scaling, Video, Text to Speech, Speech to Text, and Fireworks. Read up on icons at developer.android.com, and look at the Material Design icon examples.

When the user taps one of the navigation drawer entries, the corresponding demo opens as a new content fragment. Each of the five sub-demos should use one single fragment layout for both the portrait and landscape views, so that any change to the interface has to be made in only one place.

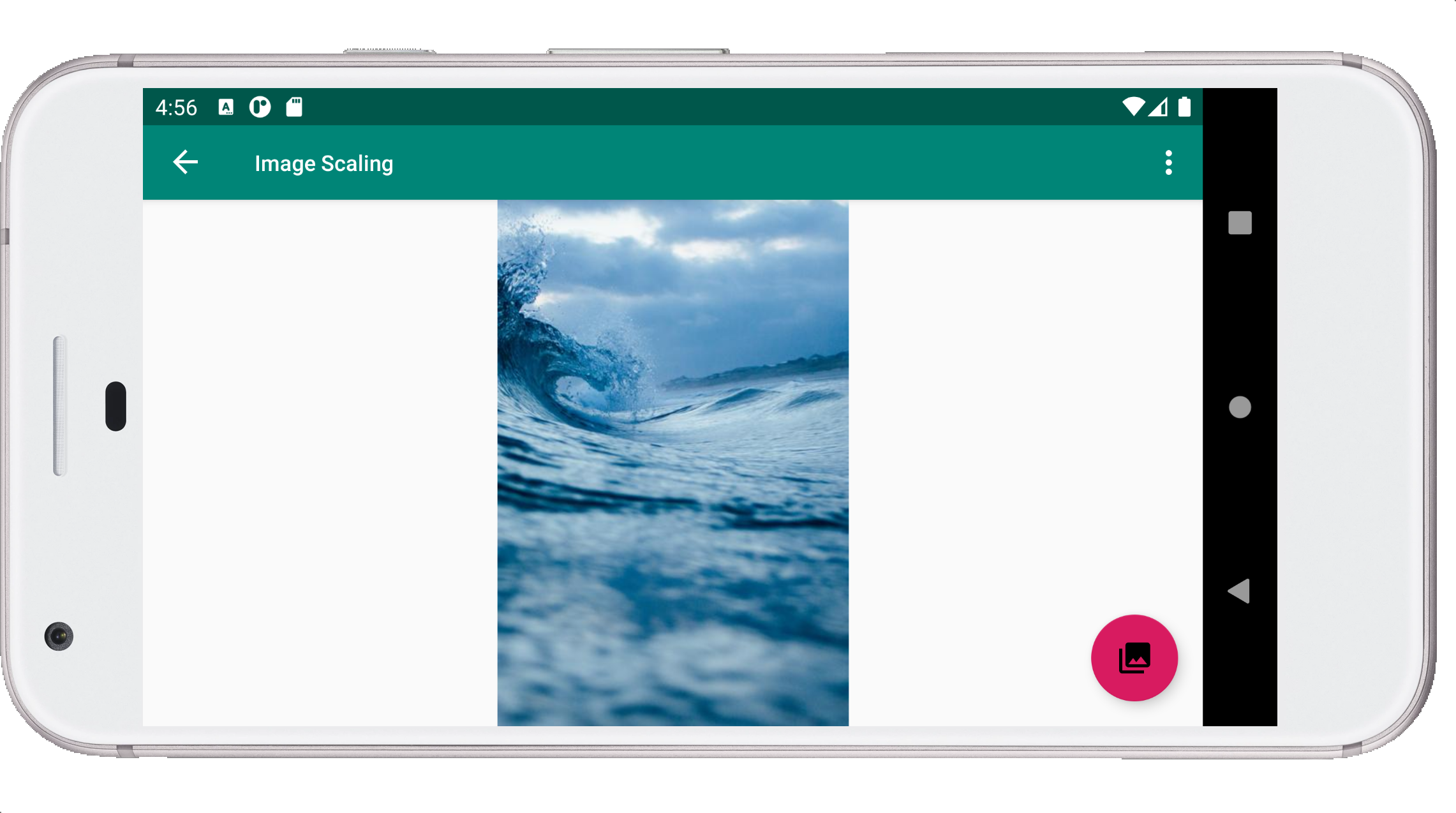

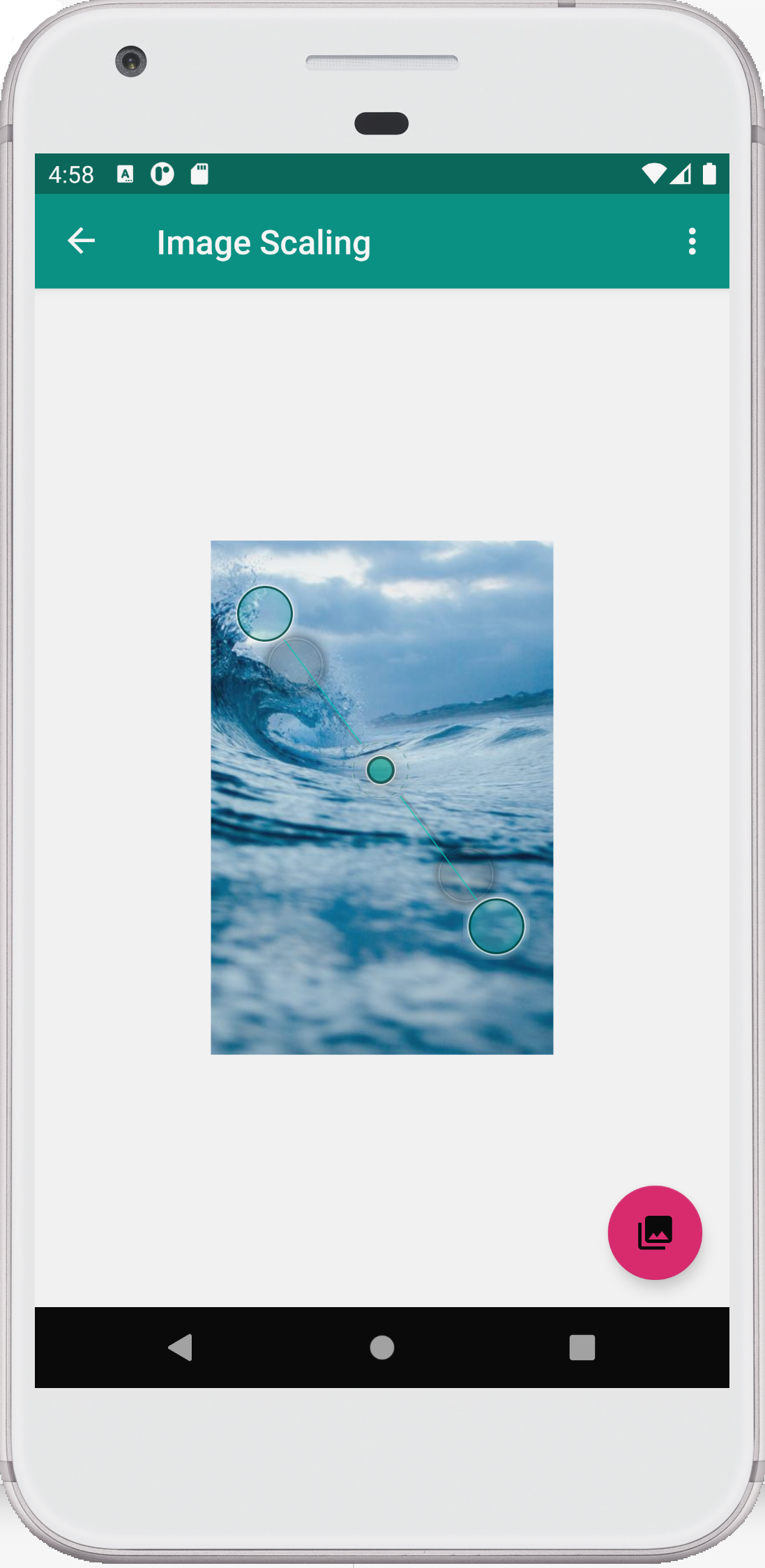

1) Image Scaling

For this fragment, implement image loading from a web service (Lorem Picsum) and scaling via a pinch gesture (which can also be tested in the emulator). The Lorem Picsum site provides a simple web API for retrieving random images. For example, the following URL returns a random image of size 400x600:

https://picsum.photos/400/600

The following URL will call up the specific 400x600 image with the id number 111:

https://picsum.photos/id/111/400/600/

We checked, and there are currently 1084 images of size 400x600 indexed in this service. You can make use of this fact for the assignment.
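The plain `https://picsum.photos/400/600` endpoint returns a random image on each request, so reloading it after a rotation is not guaranteed to show the same picture. One way around this, sketched below in plain Kotlin, is to pick a random id yourself, keep it in the fragment's ViewModel, and build the id-specific URL from it. This assumes the 1084 indexed images use ids 0 to 1083; picsum may skip some ids, so a real app should handle an occasional failed load. The names `PICSUM_IMAGE_COUNT`, `picsumUrl`, and `randomPicsumId` are hypothetical.

```kotlin
import kotlin.random.Random

// Assumption: ids 0 until 1084 cover the indexed images (some ids may be missing).
val PICSUM_IMAGE_COUNT = 1084

/** Builds the URL of one specific picsum image, e.g. id 111 at 400x600. */
fun picsumUrl(id: Int, width: Int = 400, height: Int = 600): String =
    "https://picsum.photos/id/$id/$width/$height/"

/** Picks a random id; storing the id (not the URL result) lets the same image reload after rotation. */
fun randomPicsumId(random: Random = Random.Default): Int =
    random.nextInt(PICSUM_IMAGE_COUNT)
```

The resulting URL string can be handed to whichever image-loading library you choose; the FAB would simply draw a new id.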

Loading images from remote sources without help can be a pain in Android development. You need to make an HTTP request against the URL, download the bytes of the image, create a Bitmap from those bytes, and then render that Bitmap in an ImageView. All of that takes time, so if you are not careful you block the main UI thread, and your app becomes unresponsive while the image is loading. In short, image loading is tedious and leaves room for plenty of errors. Luckily, libraries nowadays make the job quite easy. We recommend the Glide library, but we have also experimented with Picasso and Coil, and you are free to use any library you like as long as you document it in your README file.

We will give a demo on adding Glide to an Android project in class.

On startup, a random image should fill the screen as much as its aspect ratio allows, without cropping. This should also hold after an orientation change.
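"Fill the screen without cropping" is a fit-inside scale: the image is scaled uniformly by the smaller of the width and height ratios, so one axis matches the view exactly and the other fits inside it. `ImageView`'s default `fitCenter` scale type already behaves this way; the plain Kotlin function below (hypothetical name `fitScale`) only makes the arithmetic explicit, which can help if you combine it with a pinch zoom factor.

```kotlin
import kotlin.math.min

/**
 * Largest uniform scale at which an imgW x imgH image fits entirely
 * inside a viewW x viewH area, keeping its aspect ratio (no cropping).
 */
fun fitScale(imgW: Int, imgH: Int, viewW: Int, viewH: Int): Float {
    require(imgW > 0 && imgH > 0) { "image dimensions must be positive" }
    return min(viewW.toFloat() / imgW, viewH.toFloat() / imgH)
}
```

For a 400x600 image on a 1080x1920 portrait screen this gives min(2.7, 3.2) = 2.7 (width-limited); rotated to 1920x1080 it gives min(4.8, 1.8) = 1.8 (height-limited).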

With a pinch gesture (see handout H10), the user should be able to resize the image, and the scale, as a percentage of the image size, should be maintained after an orientation change.
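Keeping the zoom percentage across rotations is easiest if you store a scale relative to the fit-to-screen size rather than an absolute pixel size, because the fit size changes with orientation. The sketch below assumes it is fed the incremental factor that `ScaleGestureDetector` reports in `onScale` (`detector.scaleFactor`). The class name `ZoomState` and the 25% to 400% clamp limits are illustrative assumptions; in the assignment, the resulting value would live in a ViewModel's `MutableLiveData`.

```kotlin
import kotlin.math.roundToInt

/** Hypothetical zoom holder: 1.0 means 100% of the fit-to-screen size. */
class ZoomState(
    private val minScale: Float = 0.25f, // assumed lower limit (25%)
    private val maxScale: Float = 4f     // assumed upper limit (400%)
) {
    var scale: Float = 1f
        private set

    /** Multiplies in one incremental pinch factor and clamps the result. */
    fun applyPinch(scaleFactor: Float): Float {
        scale = (scale * scaleFactor).coerceIn(minScale, maxScale)
        return scale
    }

    /** Zoom as a whole-number percentage, e.g. 150 for 1.5x. */
    fun percent(): Int = (scale * 100).roundToInt()
}
```

Because this value does not depend on which image is displayed, loading a new image with the FAB can leave it untouched, and the on-screen size is the fit-to-screen scale multiplied by `scale`.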

In this fragment, tapping the FAB loads a new image, which should keep the current scale.

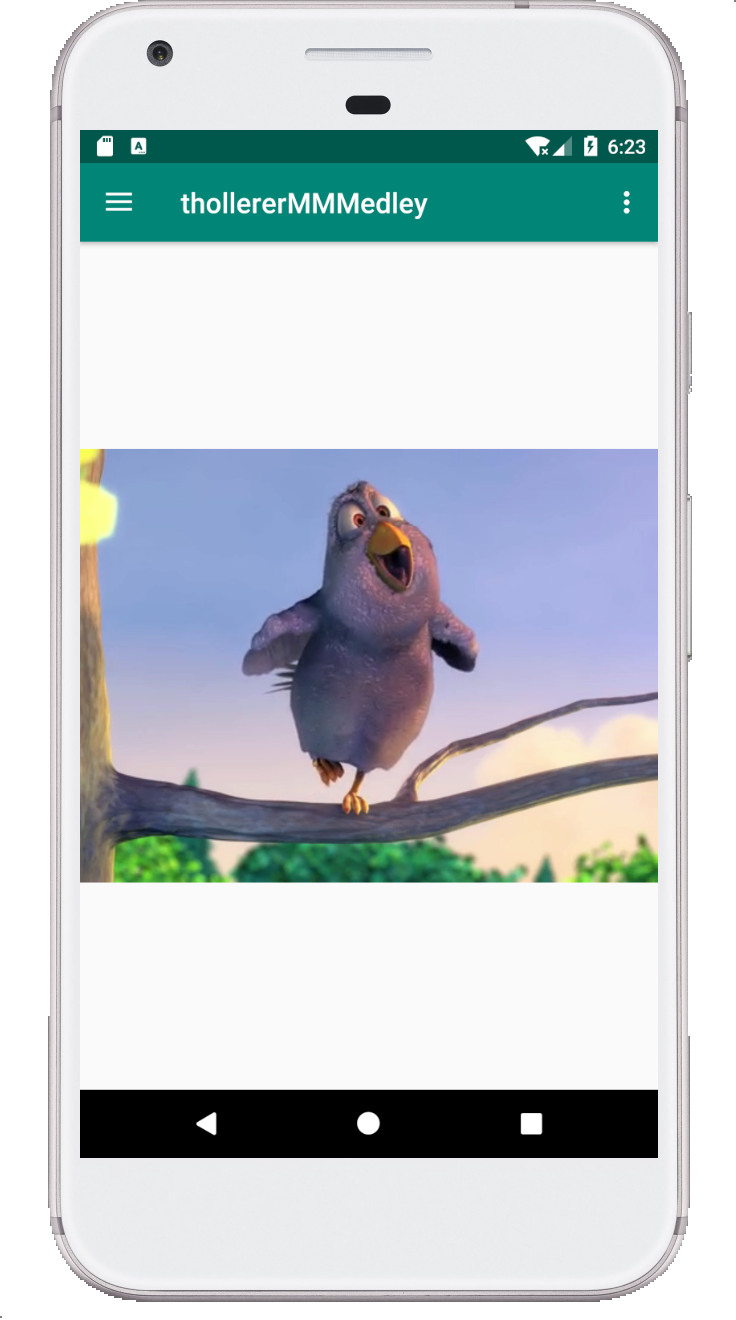

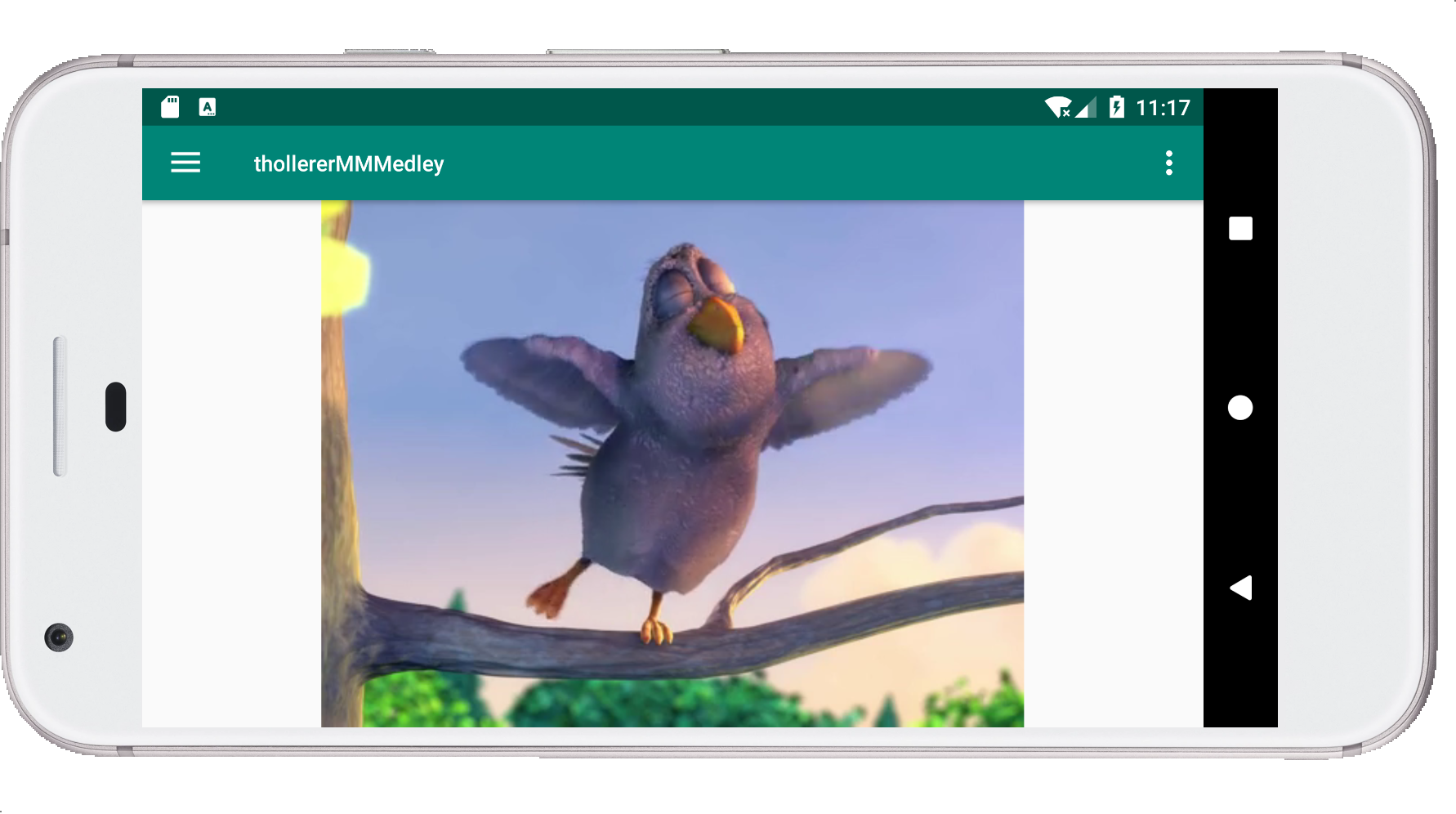

2) Video Playback

The Video fragment simply plays a short video clip, which you include as a resource instead of retrieving it from the web. Playback should start as soon as the user taps the Video entry in the navigation drawer. Put this video file into the `res/raw` folder: bigbuck.mp4. Use a VideoView widget to play it. This tutorial gives an example in Java; you'll do it in Kotlin. This approach can run into problems with very large media files, but the file linked here is small enough not to cause any.

3) Text to Speech

For the TextToSpeech demo, display a layout with an EditText widget (the Plain Text component in the Text Field section of the Android Studio layout editor's Palette). The EditText should be multi-line and, while empty, show the hint "Type something you want me to say!", defined in strings.xml. This is what it should look like:

When the FAB is tapped, Android's speech engine reads aloud whatever has been entered in the EditText widget.

4) Speech to Text

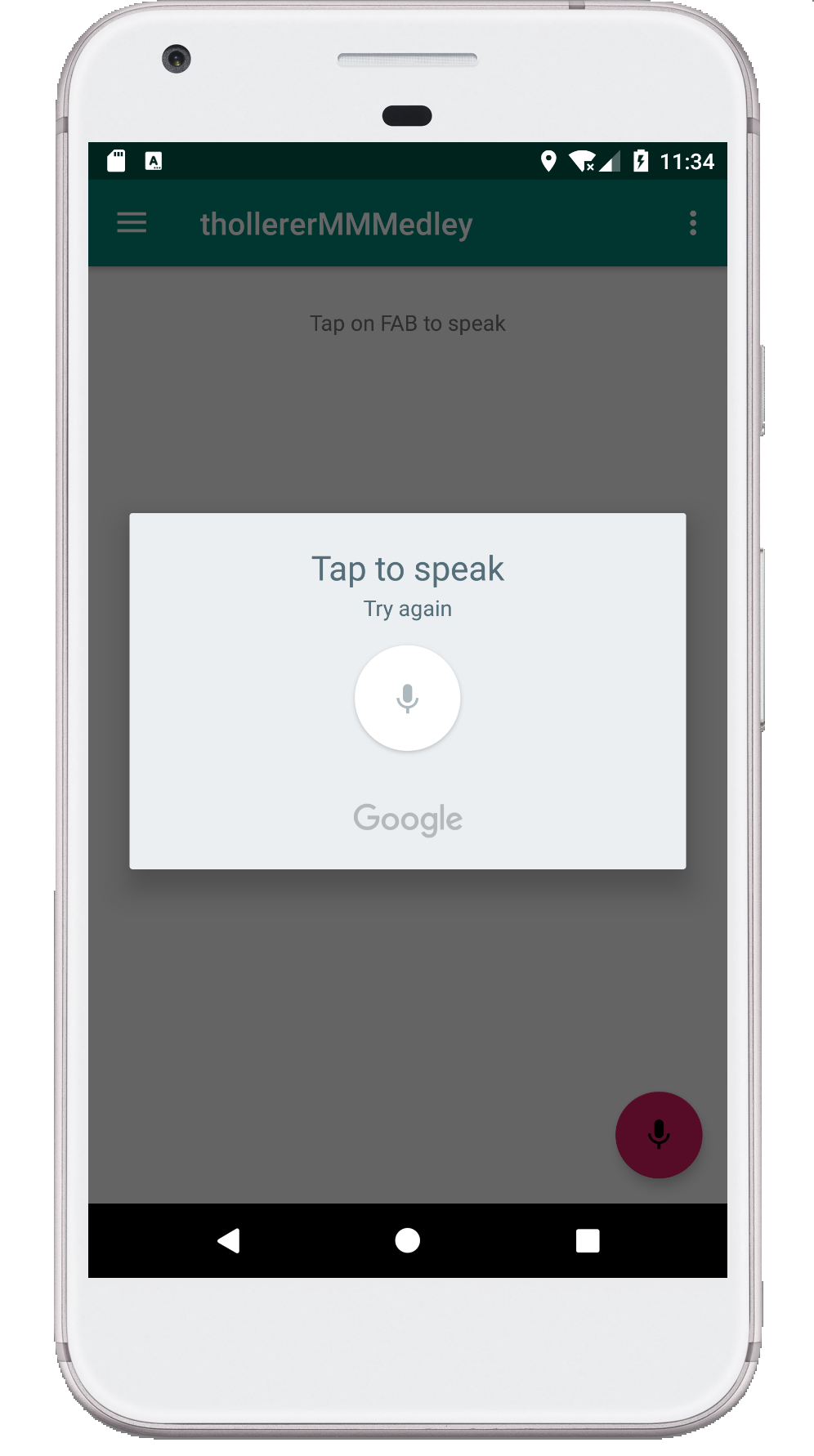

The SpeechToText layout consists of a TextView (initially empty) above a text prompt asking the user to tap the FAB, which should show a microphone icon in this fragment.

When the FAB is pressed, launch Android's built-in activity for capturing speech as text; when that activity returns its result, display the spoken text in the TextView.

5) Custom Animation: Fireworks

You will program a custom animation in which red circles perform an "explosion" animation when the user touches the screen. No FAB functionality is needed here, so the FAB should be hidden.

As discussed in class on Thu, Apr. 28, use this helper class to set up a thread that calls a given update function at a requested frame rate (fps): AnimationThread.kt

The balls should start out with some random movement, slow enough that you can test orientation changes while the particles are moving.
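The fireworks state can be kept free of Android classes, which makes it easy to hold in a ViewModel so that particles continue from where they were after a rotation. The plain Kotlin sketch below is one possible model, not a reference solution: `Particle`, `Fireworks`, `seed`, `explode`, and `step`, as well as the speeds and particle counts, are hypothetical choices. A custom View would draw each particle as a red circle, and the update function driven by AnimationThread.kt could call `step` once per frame with `dt = 1f / fps` (the exact interface of that helper is not shown here).

```kotlin
import kotlin.math.PI
import kotlin.math.cos
import kotlin.math.sin
import kotlin.random.Random

/** One circle: position in pixels, velocity in pixels per second. */
data class Particle(var x: Float, var y: Float, var vx: Float, var vy: Float)

/** Hypothetical Android-free model for the fireworks fragment. */
class Fireworks(private val random: Random = Random.Default) {
    val particles = mutableListOf<Particle>()

    /** Initial slow, random drift so rotation can be tested mid-motion. */
    fun seed(count: Int, width: Float, height: Float, maxSpeed: Float = 40f) {
        repeat(count) {
            particles += Particle(
                random.nextFloat() * width, random.nextFloat() * height,
                (random.nextFloat() * 2 - 1) * maxSpeed, (random.nextFloat() * 2 - 1) * maxSpeed
            )
        }
    }

    /** Spawns `count` particles flying outward in random directions from a touch point. */
    fun explode(x: Float, y: Float, count: Int = 30, speed: Float = 200f) {
        repeat(count) {
            val angle = random.nextDouble(0.0, 2 * PI)
            val v = speed * (0.5f + random.nextFloat()) // vary speed so the burst is not a perfect ring
            particles += Particle(x, y, (v * cos(angle)).toFloat(), (v * sin(angle)).toFloat())
        }
    }

    /** Advances every particle by dt seconds, bouncing off the view edges. */
    fun step(dt: Float, width: Float, height: Float) {
        for (p in particles) {
            p.x += p.vx * dt
            p.y += p.vy * dt
            if (p.x < 0f || p.x > width) { p.vx = -p.vx; p.x = p.x.coerceIn(0f, width) }
            if (p.y < 0f || p.y > height) { p.vy = -p.vy; p.y = p.y.coerceIn(0f, height) }
        }
    }
}
```

Keeping `dt` explicit means the animation speed stays the same if you change the requested fps.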

Have fun with this assignment!

Please look at the following concepts, which are critical to a successful solution to this programming exercise:

- Fragments API Guide (see also handout H7 and slides S8).

- The Jetpack components, as described in handouts H3 and H4, including ViewModel and LiveData (and a working knowledge of the Navigation principles used in the Android Studio template code), as well as the new way of saving state.

- Speech handling (slides S10)

- Multitouch Gestures (handout H11)

- 2D Graphics (slides S9)