CS 291A, Assignment 4

Option Augmented Mosaicing

ChangeLog: ...

For this assignment, you will write an OpenCV application to produce a mosaic from a video stream. A mosaic is a way of stitching together images which are related either by a pure camera rotation or by views of a planar surface. Both transformations can be represented by a 3x3 homography matrix.

1. Video Display

The first step is to implement a basic video viewer which grabs frames from either a camera or a video file. Use the OpenCV VideoCapture class for this purpose. Your application should take a single optional argument from the command line which specifies the path to a video file. If the argument is omitted, then the application should start video capture from your camera.

Using OpenGL and GLUT, your application should display video frames as they are captured. You can re-use the basic GLUT code you developed in the previous assignment.

Proper OpenGL code should not perform computation in the display() function. Computation should be performed in the idle() function (registered with glutIdleFunc()). The display() function should only redraw elements to the screen. For your application, you should grab a frame from the camera or video file in the idle() function, store it in a global structure, and call glutPostRedisplay() so that GLUT schedules a redraw. The display() function then only draws the stored image.

2. Feature Detection

To establish correspondence between consecutive video frames, we will detect and match keypoints. Keypoints are points in the image which are expected to be reliably matched, such as corner points.

There are many different keypoint detectors implemented in OpenCV. We will use the aptly-named FAST detector, implemented in the cv::FastFeatureDetector class. More information on FAST can be found here:

http://www.edwardrosten.com/work/fast.html

Write code to run the FAST detector on each frame, using the default parameters. Display the points on the video frame as yellow dots.

3. Frame-to-frame Point Tracking

Now, you need to track the points detected in one frame to their new locations in the next frame. There are many ways to do this as well. A classic approach is Kanade-Lucas-Tomasi (KLT) tracking. This method iteratively updates each point location by estimating a warp function which describes the transformation from the first frame to the second, and it operates over an image pyramid so that larger motions can be handled. The original paper is:

B. D. Lucas and T. Kanade, An Iterative Image Registration Technique with an Application to Stereo Vision, 1981.

This technique is implemented in the OpenCV function cv::calcOpticalFlowPyrLK. The function returns a status vector with one entry per point, which marks the points that were successfully tracked. Remove the features which could not be tracked from your data structure.
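The removal step can be sketched as an in-place compaction of the two point lists. This is only an illustration under stated assumptions: `Point2` is a hypothetical stand-in for cv::Point2f so that the sketch compiles without OpenCV, `keepTracked` is a made-up helper name, and the status vector is assumed to hold one unsigned char per point with non-zero meaning "tracked".

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point2f.
struct Point2 { float x, y; };

// Keeps only the point pairs whose status entry is non-zero.
// prev[i] and next[i] are the same feature in two consecutive frames.
// Both vectors are compacted in place so that their indices stay aligned.
// Returns the number of surviving tracks.
std::size_t keepTracked(std::vector<Point2>& prev,
                        std::vector<Point2>& next,
                        const std::vector<unsigned char>& status)
{
    std::size_t kept = 0;
    for (std::size_t i = 0;
         i < status.size() && i < prev.size() && i < next.size(); ++i) {
        if (status[i]) {
            prev[kept] = prev[i];
            next[kept] = next[i];
            ++kept;
        }
    }
    prev.resize(kept);
    next.resize(kept);
    return kept;
}
```

Compacting both lists together keeps prev[i] and next[i] paired, which is what the homography estimation in the next step expects.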

Display the feature tracks by drawing a yellow line from the previous location to the current location on the video frame. Toggle between this view and the point view using the 'f' key.

4. Homography Estimation

Now you will try to find a homography which explains the motion observed between the two video frames. This can be accomplished with the OpenCV function cv::findHomography. Use the RANSAC method to separate inliers from outliers, and use a maximum reprojection error of 1 pixel.
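To make the 1 pixel threshold concrete, the following sketch shows the test that decides whether a correspondence counts as an inlier: the source point is projected through the homography, and the distance to the observed destination point is compared with the threshold. In practice cv::findHomography can report this classification itself through its optional mask output, so this code is only an illustration. `Mat3`, `Point2`, `project` and `classifyInliers` are hypothetical names, and the sketch assumes the projective coordinate w is never zero.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

using Mat3 = std::array<double, 9>;   // row-major 3x3 matrix (hypothetical type)
struct Point2 { double x, y; };       // stand-in for cv::Point2d

// Applies H in homogeneous coordinates and divides by w (assumed non-zero).
Point2 project(const Mat3& H, Point2 p)
{
    const double x = H[0] * p.x + H[1] * p.y + H[2];
    const double y = H[3] * p.x + H[4] * p.y + H[5];
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    return { x / w, y / w };
}

// mask[i] is 1 when the reprojection error of pair i is at most
// `threshold` pixels, and 0 otherwise.
std::vector<unsigned char> classifyInliers(const Mat3& H,
                                           const std::vector<Point2>& src,
                                           const std::vector<Point2>& dst,
                                           double threshold)
{
    std::vector<unsigned char> mask(src.size(), 0);
    for (std::size_t i = 0; i < src.size() && i < dst.size(); ++i) {
        const Point2 q = project(H, src[i]);
        const double err = std::hypot(q.x - dst[i].x, q.y - dst[i].y);
        mask[i] = (err <= threshold) ? 1 : 0;
    }
    return mask;
}
```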

Maintain a homography which relates the first frame to the current frame. This is produced by chaining together the frame-to-frame homographies, i.e. multiplying them together with the most recent one on the left: H = H_n * H_{n-1} * ... * H_1. This gives a homography such that for points x_1 in the first frame and x_n in the current frame, x_n = H * x_1 (in homogeneous coordinates, up to scale). The inverse homography H^{-1} can be used to transform points in the current frame to the first frame. (Note: findHomography() returns a double precision matrix, type CV_64FC1.)

Display the inliers and outliers when the 's' key is pressed, using green for inliers and red for outliers.

5. Adding annotations

Now implement the ability to add annotations to the video. When you click on the video frame, that spot should be marked in the reference coordinate system. Using the current homography, figure out how to calculate the coordinates of the mouse in the reference frame of the first image. Then at each subsequent frame, project the point to its new location in the current frame according to the updated homography. The point should stay "fixed" to the place in the scene where you clicked.
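One way to read the two mappings involved: a click is taken from the current frame into the first frame with the inverse homography and stored there, and the stored point is taken back into each later frame with that frame's forward homography. The sketch below assumes the click has already been converted to image pixel coordinates and that the homography follows the convention x_n = H * x_1. `Mat3`, `Point2`, `inverse`, `clickToReference` and `referenceToCurrent` are hypothetical names.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>

using Mat3 = std::array<double, 9>;   // row-major 3x3 matrix (hypothetical type)
struct Point2 { double x, y; };

// Applies H in homogeneous coordinates and divides by w (assumed non-zero).
Point2 project(const Mat3& H, Point2 p)
{
    const double x = H[0] * p.x + H[1] * p.y + H[2];
    const double y = H[3] * p.x + H[4] * p.y + H[5];
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    return { x / w, y / w };
}

// Inverts a 3x3 matrix via the adjugate; throws if it is singular.
Mat3 inverse(const Mat3& M)
{
    const double a = M[0], b = M[1], c = M[2],
                 d = M[3], e = M[4], f = M[5],
                 g = M[6], h = M[7], i = M[8];
    const double det = a * (e * i - f * h) - b * (d * i - f * g)
                     + c * (d * h - e * g);
    if (std::fabs(det) < 1e-12)
        throw std::runtime_error("singular homography");
    const double s = 1.0 / det;
    Mat3 inv = { s * (e * i - f * h), s * (c * h - b * i), s * (b * f - c * e),
                 s * (f * g - d * i), s * (a * i - c * g), s * (c * d - a * f),
                 s * (d * h - e * g), s * (b * g - a * h), s * (a * e - b * d) };
    return inv;
}

// Current-frame click -> first-frame (reference) coordinates.
Point2 clickToReference(const Mat3& firstToCurrent, Point2 click)
{
    return project(inverse(firstToCurrent), click);
}

// Stored reference point -> where to draw it in the current frame.
Point2 referenceToCurrent(const Mat3& firstToCurrent, Point2 ref)
{
    return project(firstToCurrent, ref);
}
```

Storing annotations in reference coordinates means the list never has to be updated; only the projection changes from frame to frame.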

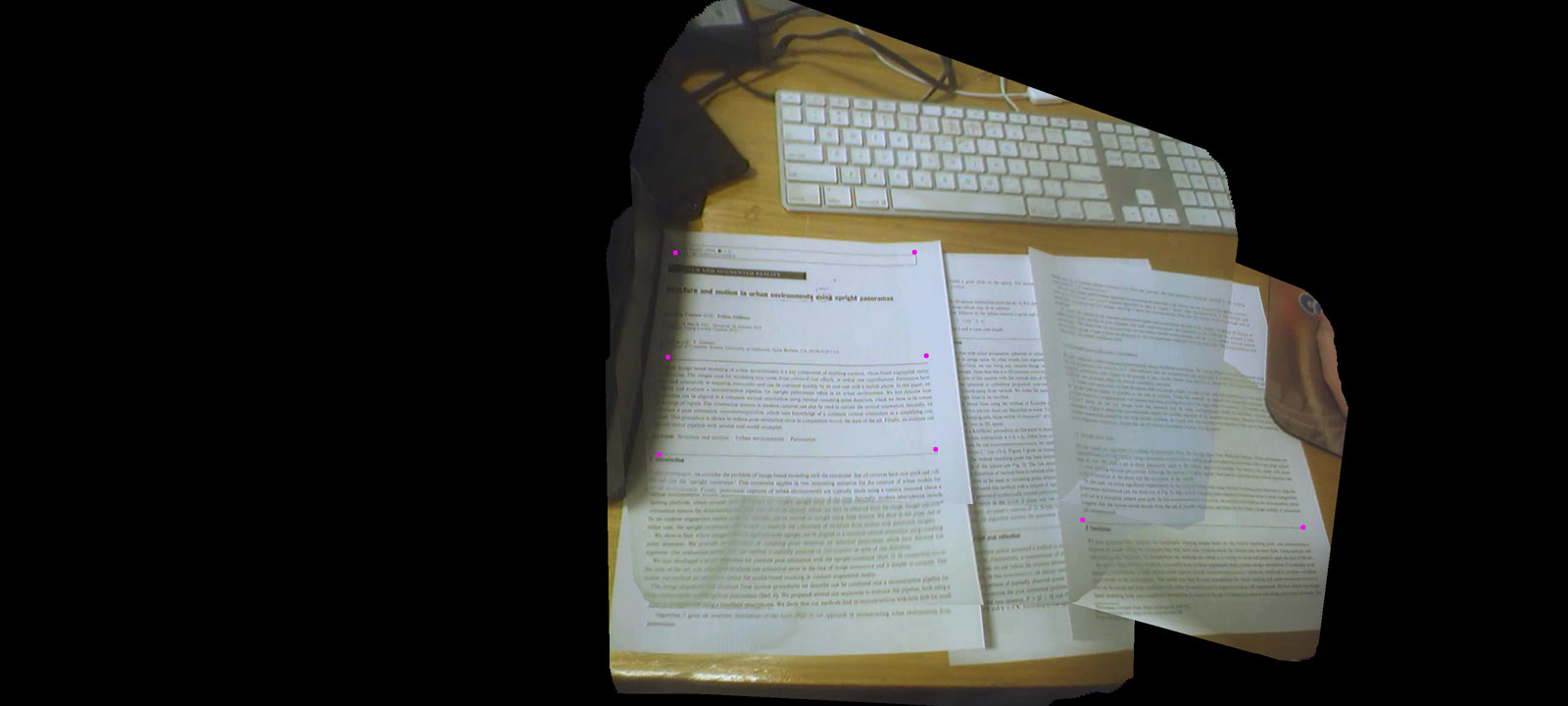

Maintain a list of point annotations, and display them at their projected locations as purple dots of size 10.

6. Stitching together the mosaic

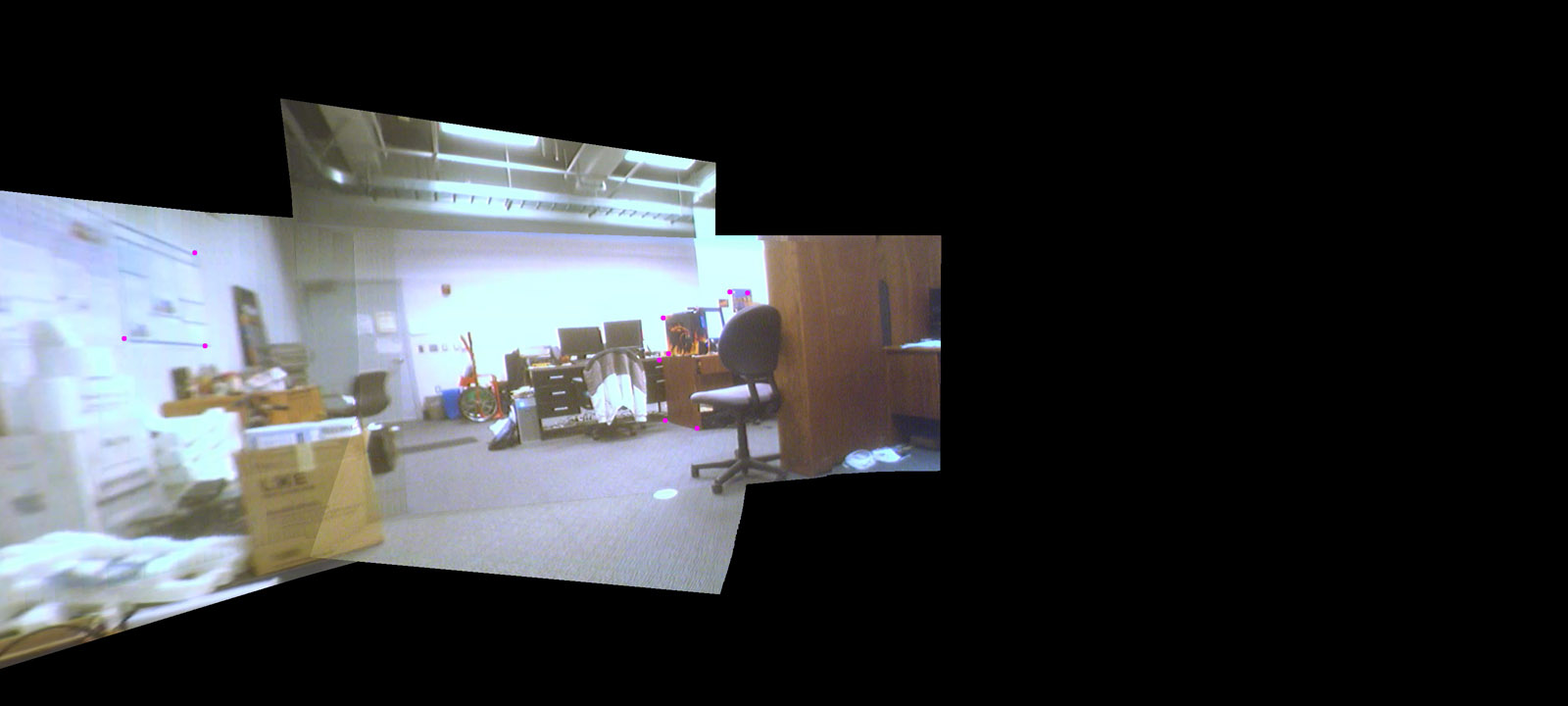

The last part of the assignment is to stitch all the video frames together into the mosaic image, based on the calculated homographies. Create a mosaic image which has five times the width of the video frame, and three times the height, filled with black pixels.

At each frame, use the homography to warp the current frame into the mosaic image. There is an OpenCV function to perform the image warping. You will use the current homography as well as a 3x3 offset matrix to move the origin to the center of the mosaic image. The warp should be such that the first frame of the video is copied to the center of the mosaic image.

Also, draw the annotation points in the mosaic image as filled purple circles with a radius of ten pixels.

After the last frame of the video, write out the mosaic image to a file called "mosaic.jpg".

Note that the method also works for flat surfaces with arbitrary camera motion.