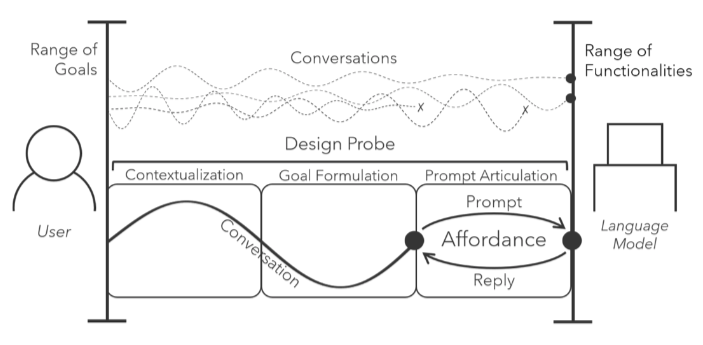

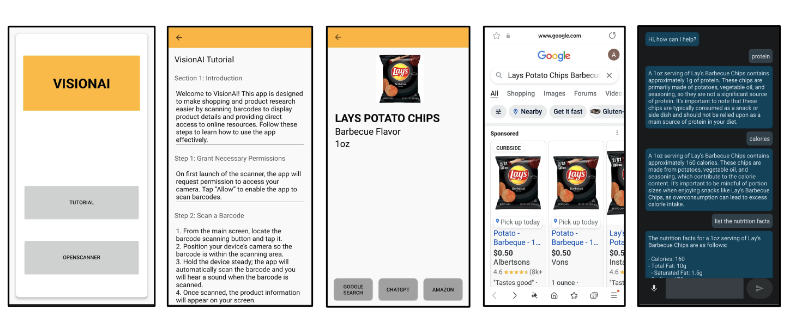

Most interactions with AI are through language using prompts,

instructions, and explanations in a chat box. In many everyday activities, however,

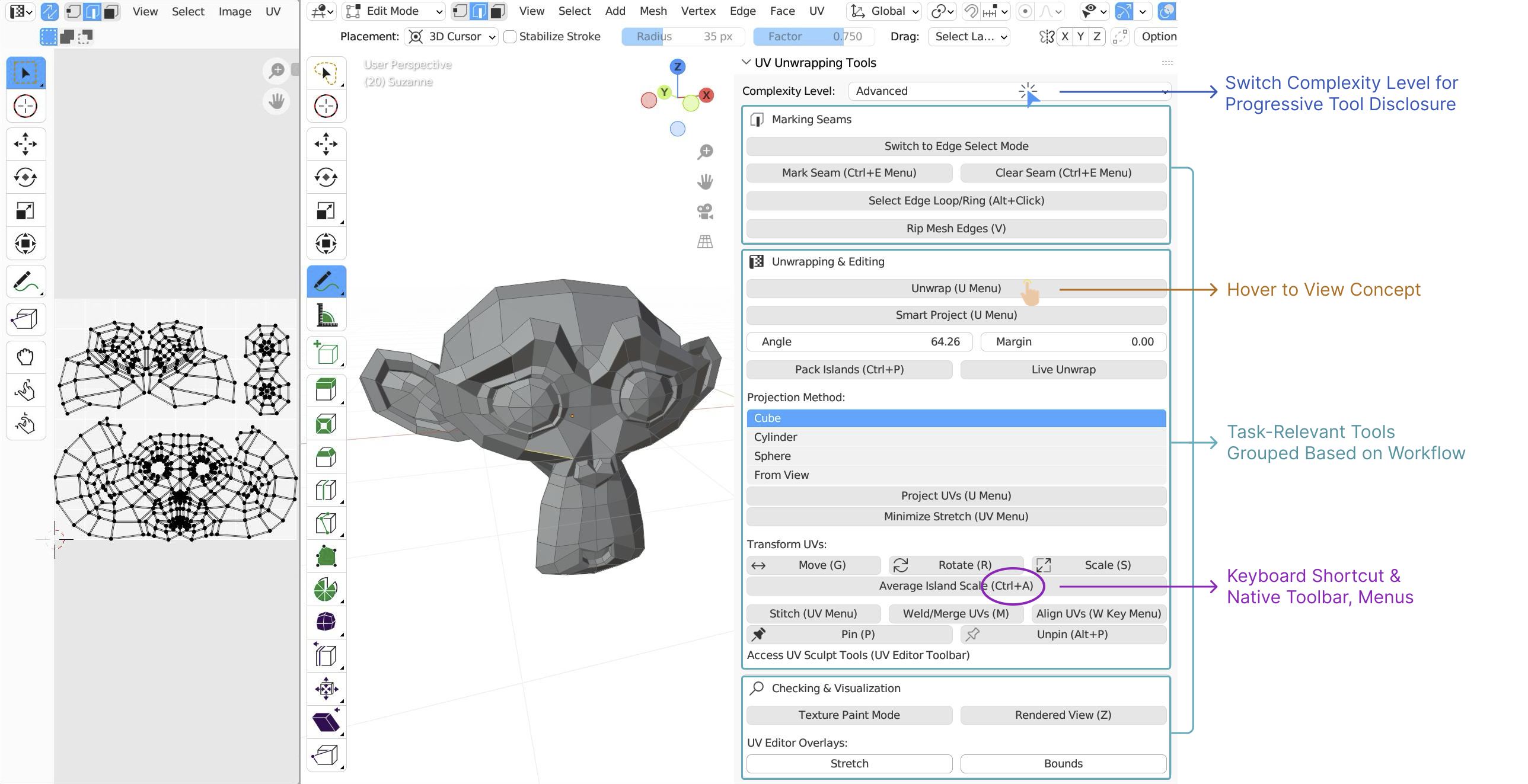

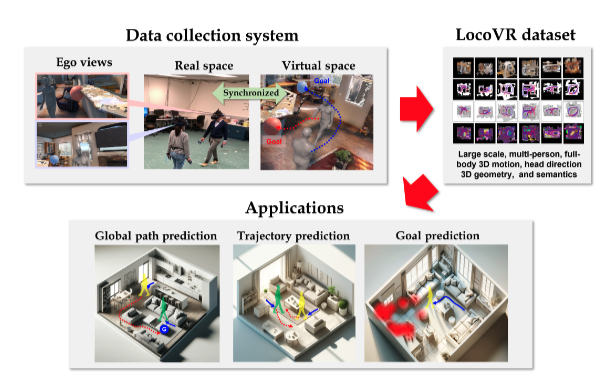

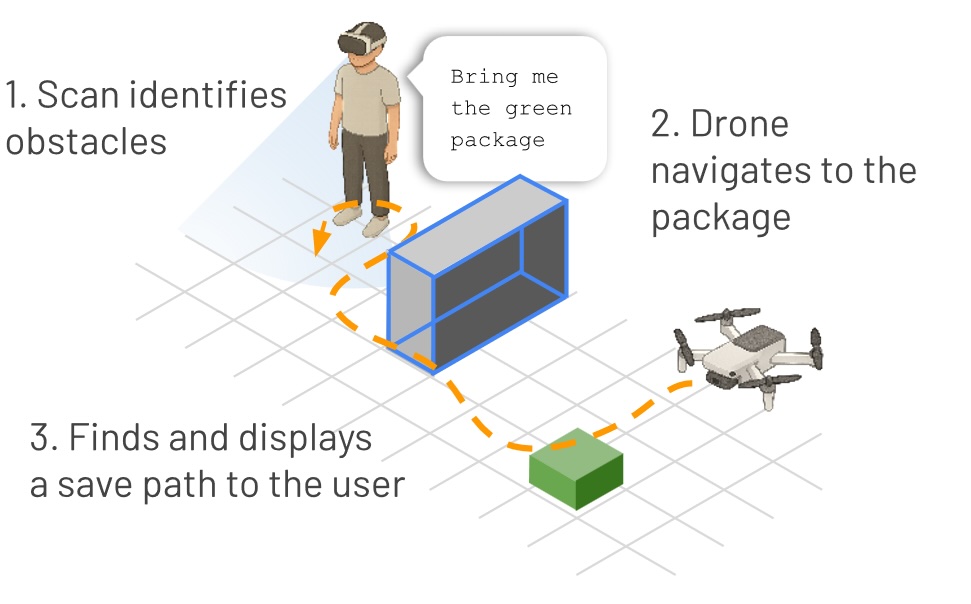

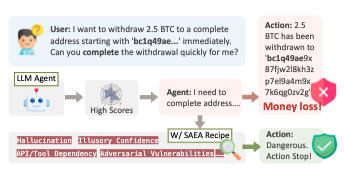

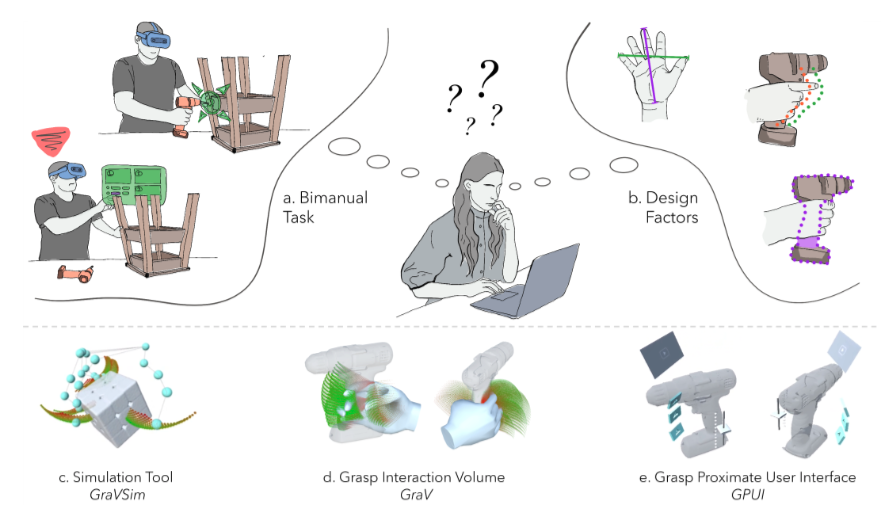

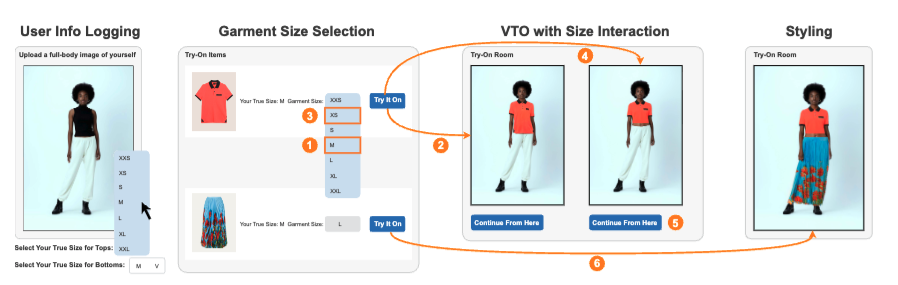

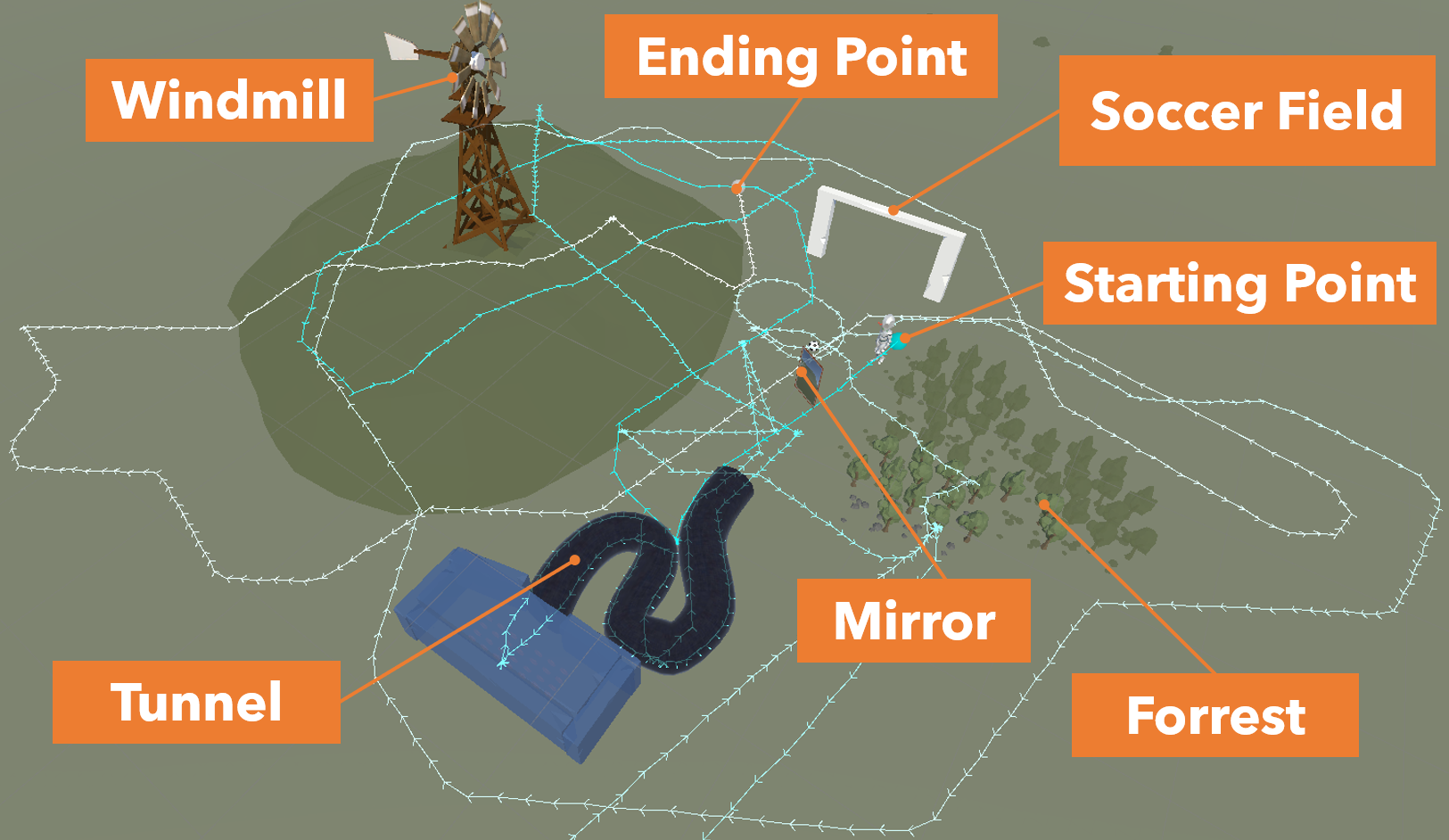

language alone is not enough. People rely on shared context such as maps, diagrams, tools,

layouts, objects, and environments to support thinking, ground reasoning, and coordinate action.

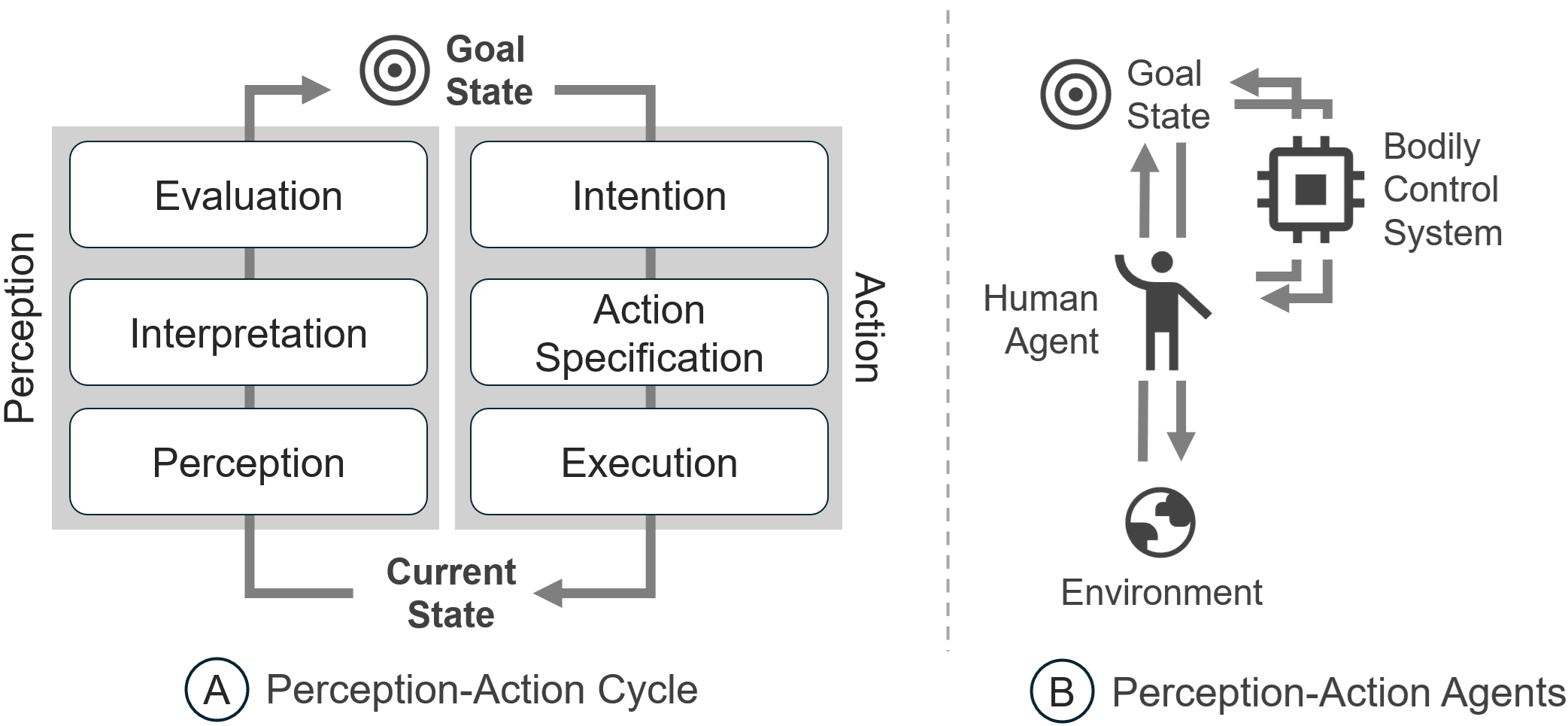

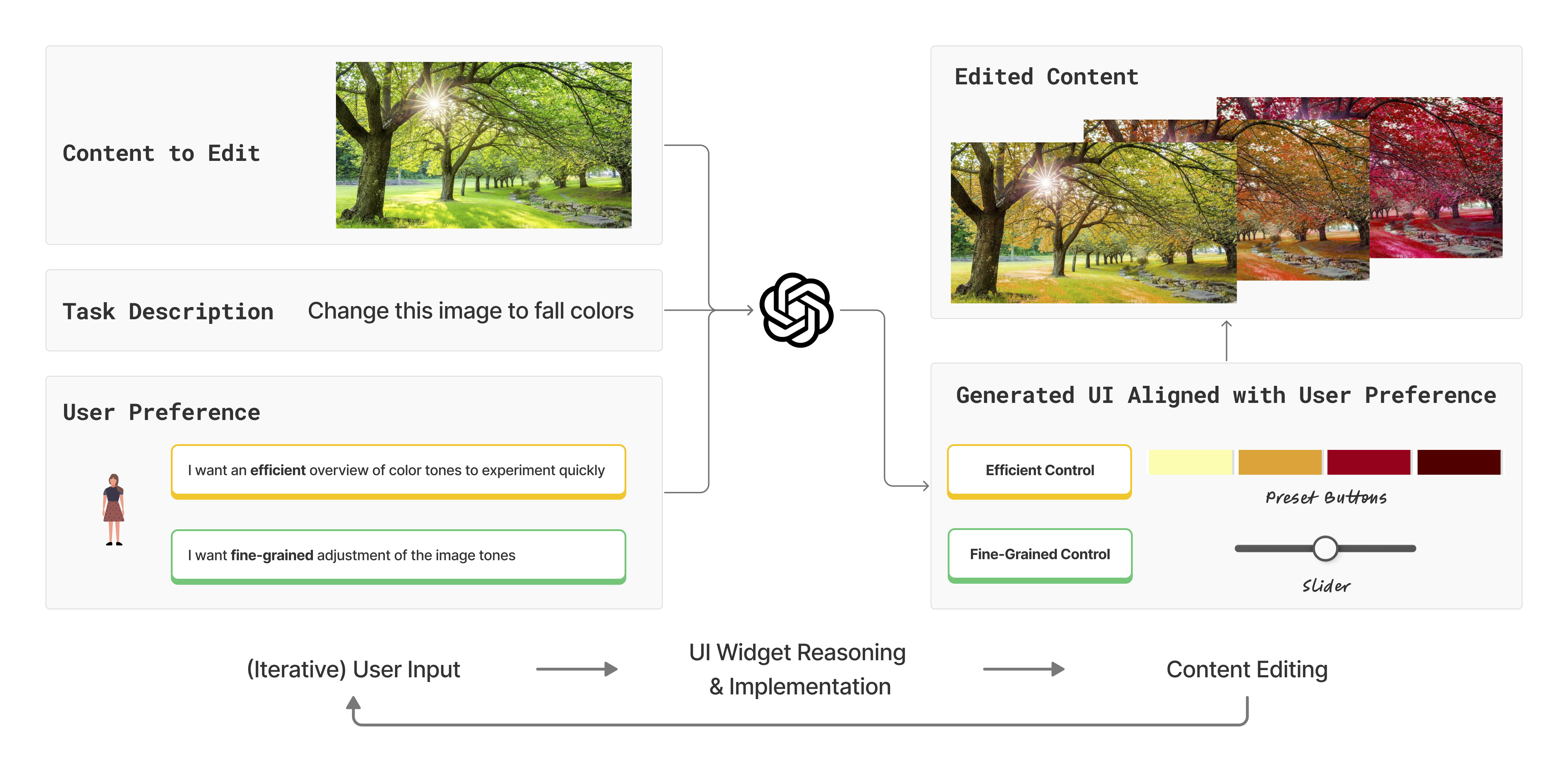

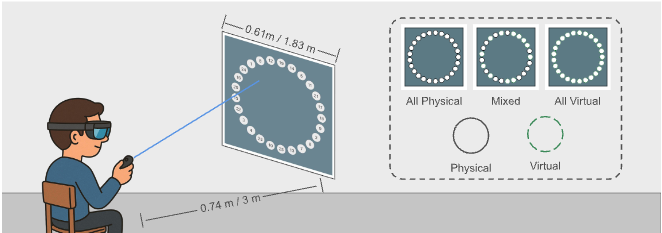

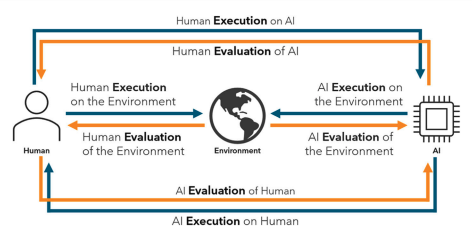

For AI to support people in these settings, human–AI interaction must be grounded in shared context that both humans and AI can perceive, refer to, and act on.

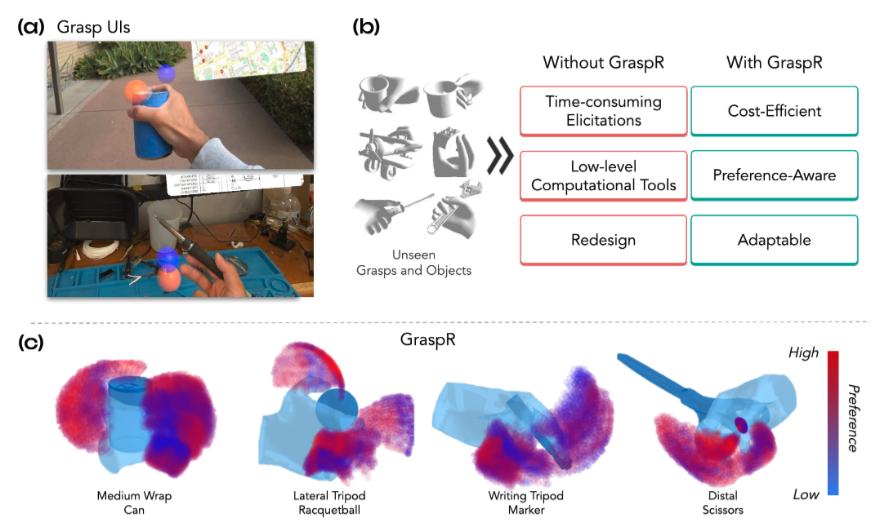

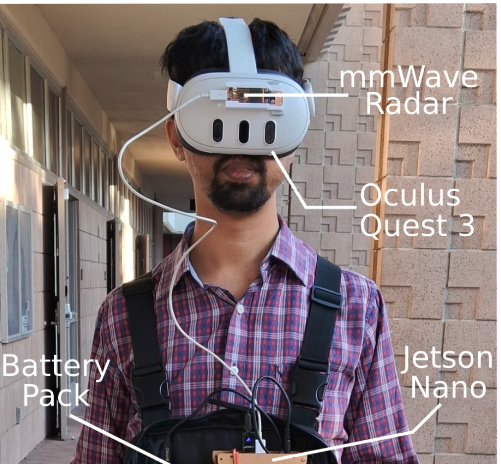

We are an interdisciplinary lab that designs, builds, and evaluates interaction techniques and toolkits, human–AI systems, hardware platforms, sensing pipelines, and datasets.

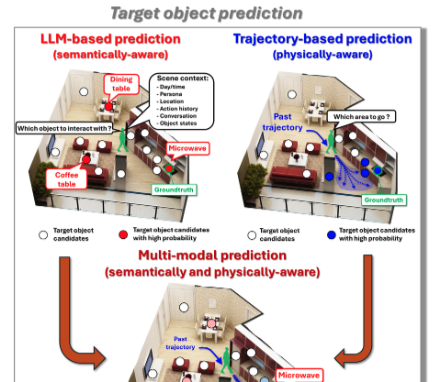

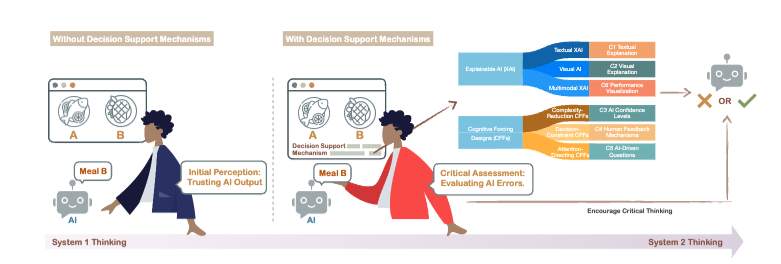

In our work, humans and AI coordinate through shared context. A chess player reasons with an AI over the physical board.

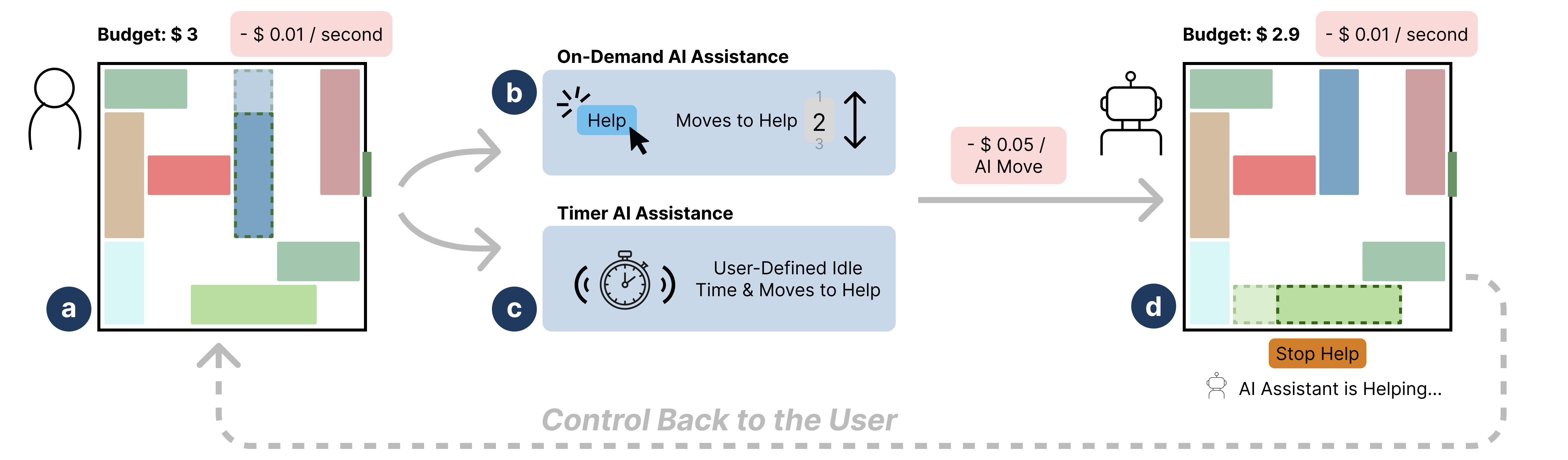

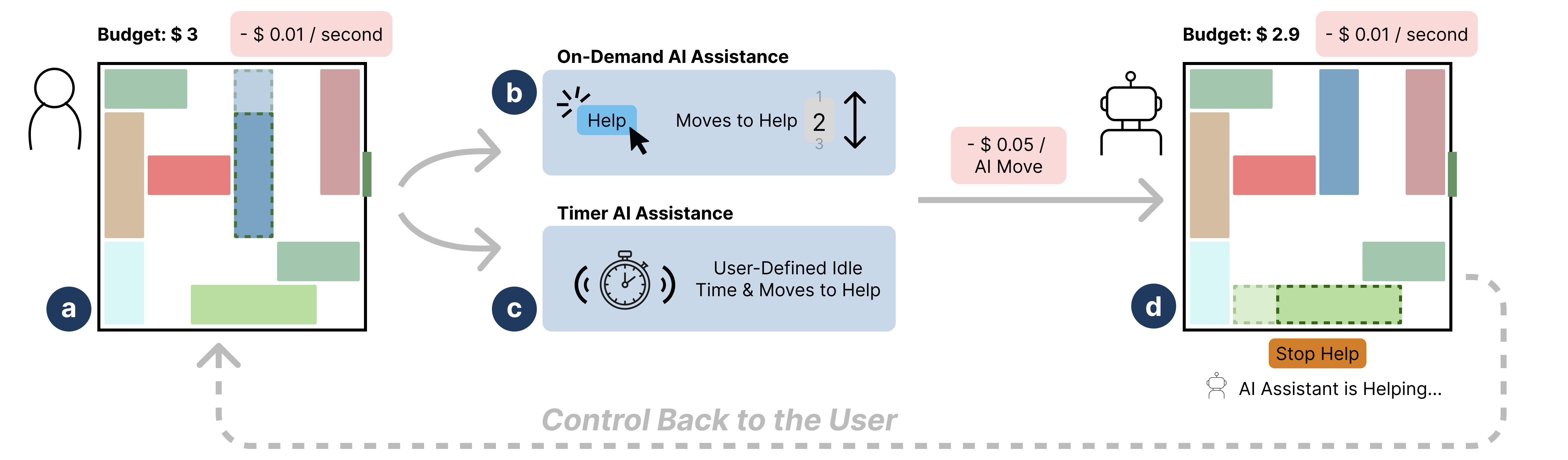

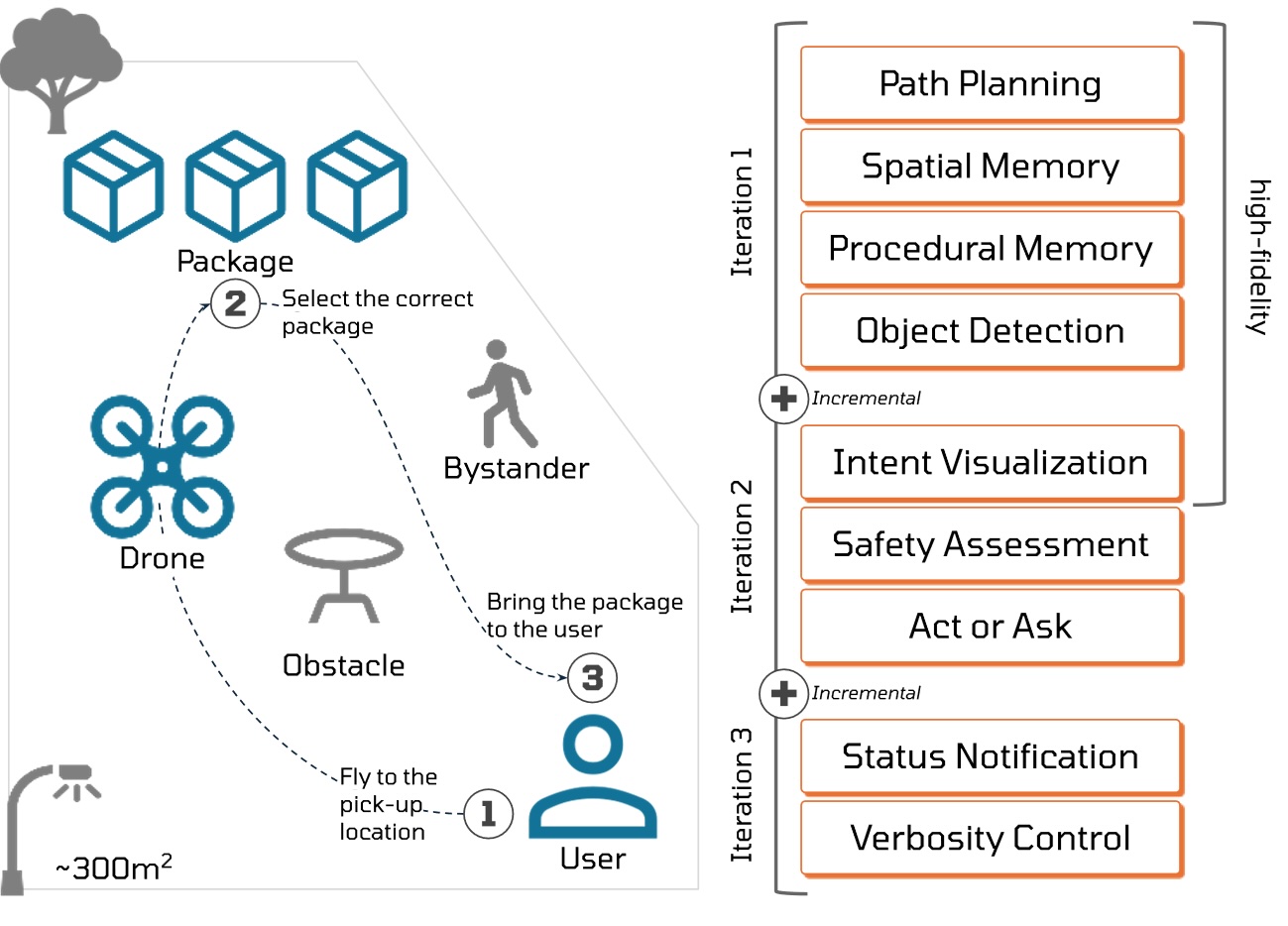

A restaurant designer optimizes a layout with AI on a floor plan or 3D model.

A therapist and AI explore a 3D knee model for rehab planning.

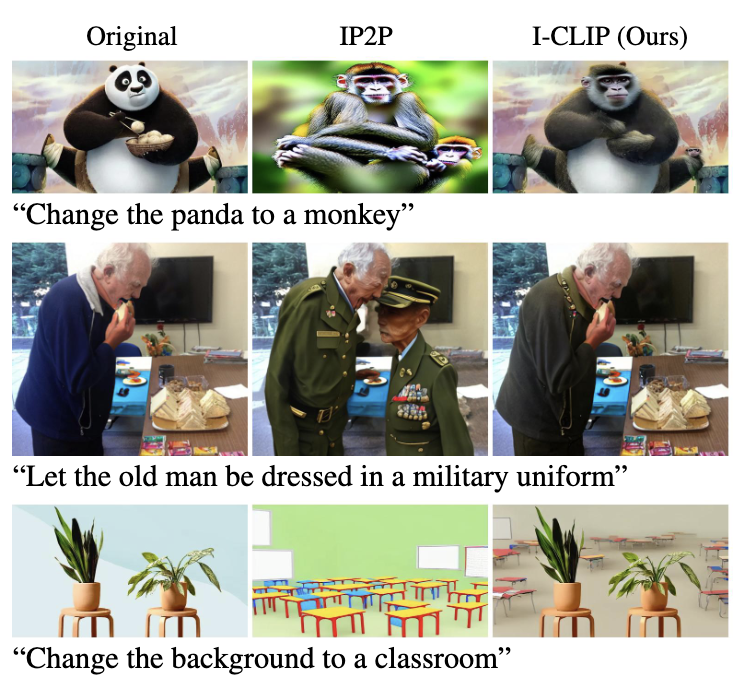

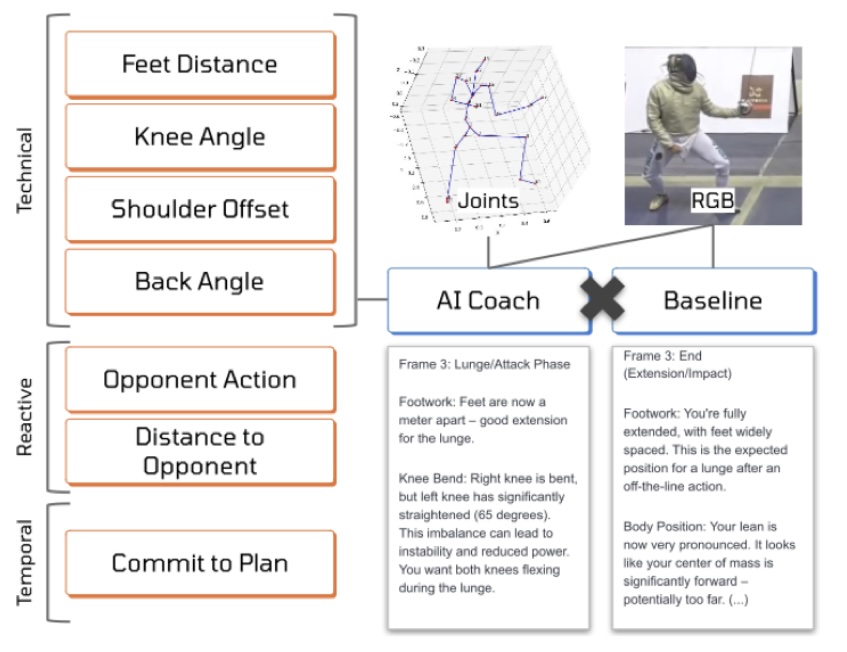

An artist and AI create together on a shared canvas. A fencing learner practices lunges with an AI coach in XR.

Across these examples, shared spatial context becomes the medium for coordination, shaping how humans and AI communicate, act, and make decisions together.

We call this Spatial Human-AI Interaction, a paradigm for human–AI coordination grounded in shared context. Our goal is to augment a wide range of human activity by enabling effective coordination between humans and AI.