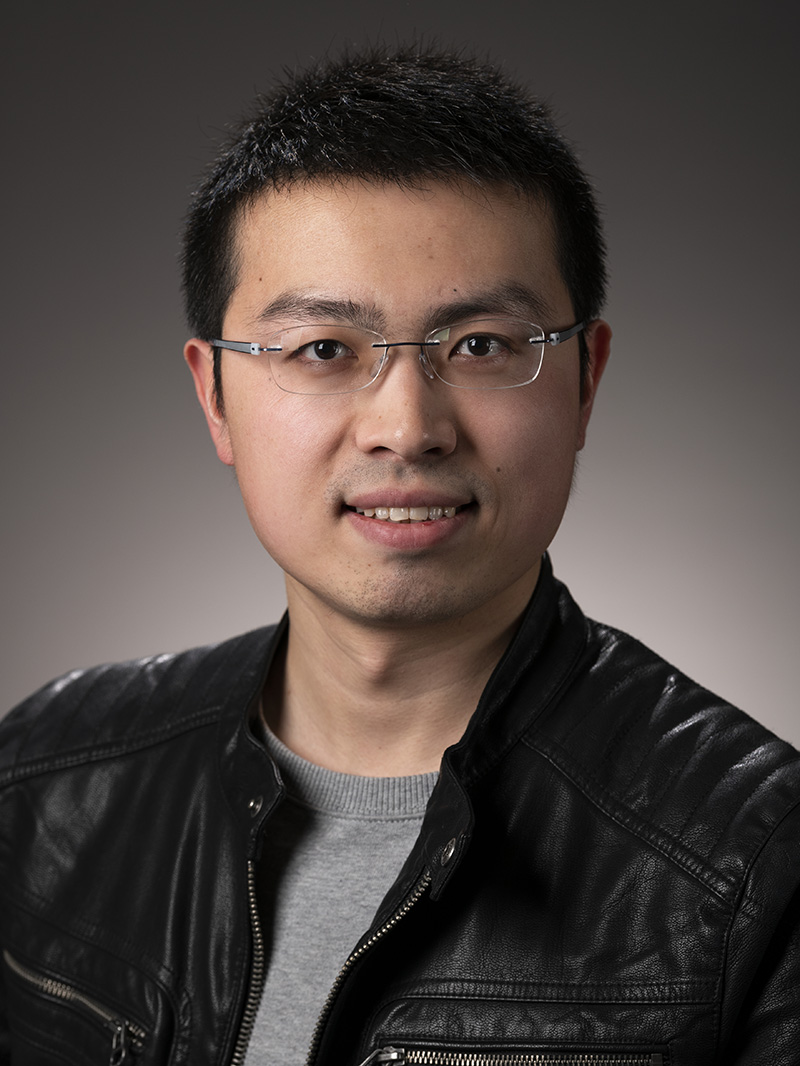

Lingqi Yan (闫令琪)

Assistant Professor

Department of Computer Science

University of California, Santa Barbara

2119 Harold Frank Hall

Santa Barbara, CA 93106

lingqi@cs.ucsb.edu

Curriculum Vitae [Aug 2019]

Latest News

- [Dec 2019] Pradeep and I co-hosted the 2nd SoCal Rendering Day at UC Santa Barbara!

- [Jul 2019] I have two papers accepted to SIGGRAPH Asia 2019.

- [May 2019] I have one paper accepted to EGSR 2019.

- [May 2019] I am glad to receive the Outstanding Doctoral Dissertation Award at this year's SIGGRAPH!

- [Mar 2019] I have two papers accepted to SIGGRAPH.

- [Dec 2018] I visited top universities and research labs (THU, PKU, USTC, ZJU, MSRA, NJUST, BUAA) in China and gave talks on next generation rendering.

- [Oct 2018] I attended SoCal Rendering Day at UCSD.

- [Jul 2018] I have joined UC Santa Barbara as an Assistant Professor!

- [Jun 2018] My Ph.D. dissertation has been accepted by the administrators at UC Berkeley. Congrats, Dr. Yan!

- [Apr 2018] I received the C.V. Ramamoorthy Distinguished Research Award for "outstanding contributions to a new research area".

- [03/23/18] Our paper Rendering Specular Microgeometry with Wave Optics has been accepted to SIGGRAPH 2018!

A Short Bio

I am an Assistant Professor of Computer Science at UC Santa Barbara, co-director of the MIRAGE Lab, and affiliated faculty in the Four Eyes Lab. Before joining UCSB, I received my Ph.D. degree from the Department of Electrical Engineering and Computer Sciences at UC Berkeley, advised remotely by Prof. Ravi Ramamoorthi at UC San Diego. During my Ph.D., I worked at Walt Disney Animation Studios (2014), Autodesk (2015), Weta Digital (2016) and NVIDIA Research (2017) as an intern. Earlier, I obtained my bachelor's degree in Computer Science from Tsinghua University in China in 2013, advised by Prof. Shi-Min Hu and Prof. Kun Xu.

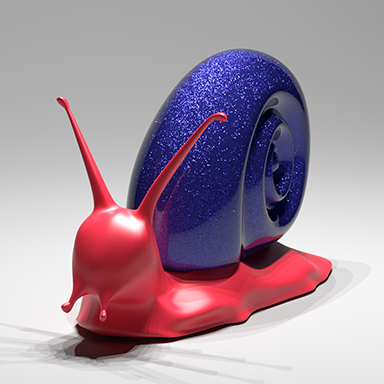

Research

My research is in Computer Graphics. During my Ph.D., I mainly aimed at rendering photorealistic visual appearance (a.k.a. hard-core graphics) at real-world complexity, building mathematical and physical theoretical foundations to reveal the principles of the visual world. I have brought original research topics to Computer Graphics, such as detailed rendering from microstructure and real-time ray tracing with reconstruction. I also took the first steps in exploiting Machine Learning approaches for physically-based rendering.

As a young Computer Graphics researcher, my dream is to present people with an interactive computer-generated world to live in, just like the ones in the movies The Matrix and Ready Player One. To achieve that, I came up with my own Rendering Equation, as you can see above. I am actively and prudently looking for outstanding students (details and FAQs) to work on related research topics, including but not limited to physically-based / image-based rendering, real-time ray tracing and realistic appearance modeling / acquisition. Let's work together, not to change the world, but to create one.

I am fortunate and proud to work with these phenomenal students:

Current PhD Students: Yaoyi (Elyson) Bai, Yang Zhou, Shlomi Steinberg

Current MS Students: Jinglei Yang

Alumni: Lei Xu (MS, now at Microsoft)

Teaching

| Term | Course | Location | Time |
|---|---|---|---|
| Spring 2020 | CS291A: Real-Time High Quality Rendering | Online | MW 5:00 PM - 6:15 PM (PT) |
| Spring 2020 | GAMES101: Introduction to Computer Graphics (in Chinese) | Online | TuF 10:00 AM - 11:00 AM (GMT+8) |
| Winter 2020 | CS180: Introduction to Computer Graphics | Phelps 2516 | TuTh 11:00 AM - 12:15 PM |
| Spring 2019 | CS180: Introduction to Computer Graphics | Girvetz 2128 | MW 11:00 AM - 12:15 PM |
| Winter 2019 | CS291A: Real-Time High Quality Rendering | Phelps 3526 | MW 3:30 PM - 5:30 PM |

Ph.D. Dissertation

Physically-based Modeling and Rendering of Complex Visual Appearance

Lingqi Yan, Advised by Ravi Ramamoorthi (Summer 2018)

Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley

2019 ACM SIGGRAPH Outstanding Doctoral Dissertation Award

In this dissertation, we focus on physically-based rendering, which synthesizes realistic images from 3D models and scenes. State-of-the-art rendering still struggles with two fundamental challenges: realism and speed. The rendered results look artificial and overly perfect, and the rendering process is too slow for both online and interactive applications. Moreover, better realism and faster speed are inherently contradictory, because the computational complexity increases substantially when trying to render higher-fidelity detailed results. We put emphasis on both ends of the realism-speed spectrum in rendering: we introduce the concept of detailed rendering and appearance modeling to accurately represent and reproduce the rich visual world from the micron level to overall appearance, and we combine sparse ray sampling with fast high-dimensional filtering to achieve real-time performance.

Dissertation Citation Ceremony Slides

Publications / Technical Reports

Rendering of Subjective Speckle Formed by Rough Statistical Surfaces

Anonymous Authors

Technical Report, archived at UCSB Computer Science Tech Reports for submission, Mar 2020

Tremendous effort has been expended by the Computer Graphics community to advance the level of realism of material appearance reproduction by incorporating increasingly advanced techniques. We are now able to re-enact the complicated interplay between light and microscopic surface features (scratches, bumps and other imperfections) in a visually convincing fashion. However, diffractive patterns arise even when no explicitly defined features are present: any random surface will act as a diffracting aperture, and its statistics heavily influence the statistics of the diffracted wave fields. Nonetheless, the problem of rendering diffraction induced by surfaces that are defined purely statistically remains wholly unexplored. We present a thorough derivation, from core optical principles, of the intensity of the scattered fields that arise when a natural, partially coherent light source illuminates a random surface. We follow with a probability-theory analysis of the statistics of those fields and present our rendering algorithm. All of our derivations are formally proven and also verified numerically. Our method is the first to render diffraction produced by a surface described only statistically, and it bridges the theoretical gap between contemporary surface modelling and rendering. Finally, we present intuitive artistic control parameters that allow rendering of physical and non-physical diffraction patterns using our method.

Website Paper Videos More Videos (183MB)

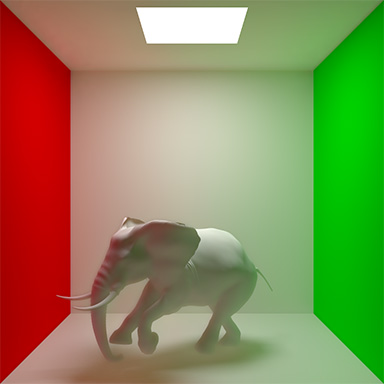

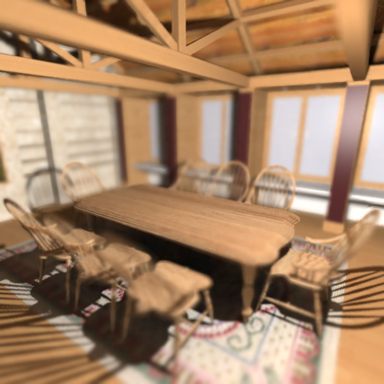

Lightweight Bilateral Convolutional Neural Networks for Real-time Diffuse Global Illumination

Hanggao Xin, Shaokun Zheng, Kun Xu, Ling-Qi Yan

Technical Report, IEEE Transactions on Visualization and Computer Graphics (in submission), Feb 2020

Physically correct, noise-free global illumination is crucial in physically-based rendering, but often takes a long time to compute. Recent approaches have exploited sparse sampling and filtering to accelerate this process but still cannot achieve real-time performance. This is partly due to the time-consuming ray sampling, even at 1 sample per pixel, and partly due to the complexity of deep neural networks. To address this problem, we propose a novel method to generate plausible global illumination for dynamic scenes in real time (>30 FPS). In our method, we first compute direct illumination and then use a lightweight neural network to predict screen-space indirect illumination. Our neural network is designed explicitly with bilateral convolution layers and takes only essential information as input (direct illumination, surface normals, and 3D positions). Also, our network efficiently maintains coherence between adjacent frames without heavy recurrent connections. Compared to state-of-the-art methods, our method produces global illumination of dynamic scenes with higher quality and better temporal coherence, and runs at real-time frame rates.

Paper Video
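The network above is built from learned bilateral convolution layers guided by normals and positions. As a point of reference only, the hand-crafted ancestor of that idea is a cross-bilateral filter, whose weights come from guide features (normals, 3D positions) rather than from the noisy radiance itself. The sketch below filters one row of pixels; the function name, the Gaussian falloffs and the `sigma_n` / `sigma_p` parameters are illustrative assumptions, not the paper's network.

```python
import math

def cross_bilateral_1d(radiance, normals, positions, radius=2,
                       sigma_n=0.2, sigma_p=0.5):
    """Filter a 1D row of pixels with a cross-bilateral kernel (hypothetical helper).

    Weights depend only on the guide features (unit normals and 3D positions),
    so the filter smooths noise without blurring across geometric edges.
    """
    n = len(radiance)
    out = []
    for i in range(n):
        acc, wsum = 0.0, 0.0
        for j in range(max(0, i - radius), min(n, i + radius + 1)):
            # Normal dissimilarity: 0 for identical normals, 1 for perpendicular ones.
            dn = 1.0 - sum(a * b for a, b in zip(normals[i], normals[j]))
            # Squared distance between world-space positions.
            dp = sum((a - b) ** 2 for a, b in zip(positions[i], positions[j]))
            w = math.exp(-dn / sigma_n) * math.exp(-dp / (2.0 * sigma_p ** 2))
            acc += w * radiance[j]
            wsum += w
        out.append(acc / wsum)  # normalize so constant input stays constant
    return out
```

For example, a row whose left half faces +z and right half faces +x keeps its 0/1 radiance step sharp, because pixels across the crease receive almost no weight from each other.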

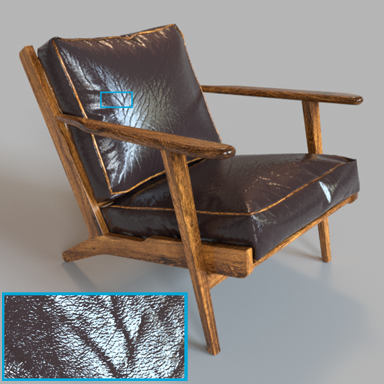

A Bayesian Inference Framework for Procedural Material Parameter Estimation

Yu Guo, Miloš Hašan, Ling-Qi Yan, Shuang Zhao

Technical Report (arXiv:1912.01067), Dec 2019

Procedural material models have been gaining traction in many applications thanks to their flexibility, compactness, and easy editability. In this paper, we explore the inverse rendering problem of procedural material parameter estimation from photographs using a Bayesian framework. We use summary functions for comparing unregistered images of a material under known lighting, and we explore both hand-designed and neural summary functions. In addition to estimating the parameters by optimization, we introduce a Bayesian inference approach using Hamiltonian Monte Carlo to sample the space of plausible material parameters, providing additional insight into the structure of the solution space. To demonstrate the effectiveness of our techniques, we fit procedural models of a range of materials (wall plaster, leather, wood, anisotropic brushed metals and metallic paints) to both synthetic and real target images.

Paper Supplementary
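To show the mechanics of the Hamiltonian Monte Carlo step mentioned above, here is a minimal 1D sampler. It is a generic textbook HMC (leapfrog integration plus a Metropolis correction), and the toy standard-normal target stands in for the real posterior over material parameters, which in the paper involves summary-function comparisons that are not modeled here. The step size, trajectory length and function names are assumptions.

```python
import math
import random

def hmc_sample(log_prob_grad, x0, n_samples, step=0.2, n_leapfrog=20, seed=0):
    """Minimal Hamiltonian Monte Carlo sampler for a 1D target density.

    log_prob_grad(x) returns (log p(x), d/dx log p(x)), up to a constant.
    """
    rng = random.Random(seed)
    x = x0
    logp, grad = log_prob_grad(x)
    samples = []
    for _ in range(n_samples):
        p0 = rng.gauss(0.0, 1.0)  # fresh Gaussian momentum each iteration
        x_new, p, g = x, p0, grad
        # Leapfrog integration of H(x, p) = -log p(x) + p^2 / 2.
        p += 0.5 * step * g
        for k in range(n_leapfrog):
            x_new += step * p
            logp_new, g = log_prob_grad(x_new)
            if k < n_leapfrog - 1:
                p += step * g
        p += 0.5 * step * g
        # Metropolis accept/reject corrects the integration error.
        h_old = -logp + 0.5 * p0 * p0
        h_new = -logp_new + 0.5 * p * p
        if rng.random() < math.exp(min(0.0, h_old - h_new)):
            x, logp, grad = x_new, logp_new, g
        samples.append(x)
    return samples


def std_normal(x):
    """Toy target: standard normal, log p(x) = -x^2 / 2 + const."""
    return -0.5 * x * x, -x
```

Calling `hmc_sample(std_normal, 0.0, 5000)` returns a chain whose sample mean is close to 0 and whose sample variance is close to 1; the spread of such samples, rather than a single optimum, is what gives insight into the solution space.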

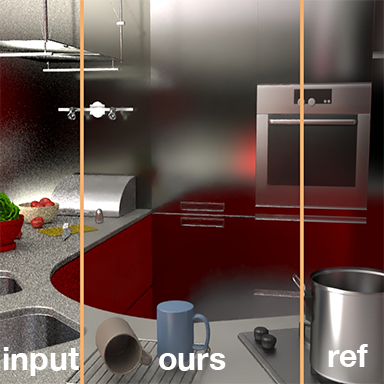

Example-Based Microstructure Rendering with Constant Storage

Beibei Wang, Miloš Hašan, Nicolas Holzschuch, Ling-Qi Yan

Technical Report, ACM Transactions on Graphics (under minor revision)

Rendering glinty details from specular microstructure enhances the level of realism, but previous methods require heavy storage for the high-resolution height field or normal map and associated acceleration structures. In this paper, we aim to dynamically generate theoretically infinite microstructure, preventing obvious tiling artifacts while achieving constant storage cost. Unlike traditional texture synthesis, our method supports arbitrary point and range queries, and essentially generates the microstructure implicitly. Our method fits the widely used microfacet rendering framework with multiple importance sampling (MIS), replacing commonly used microfacet normal distribution functions (NDFs) such as GGX with a detailed local solution, at a small runtime performance overhead.

Paper Video
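For context on what gets replaced, below is the standard smooth GGX (Trowbridge-Reitz) NDF, together with a numerical check of its usual normalization, where the projected microfacet area integrates to one over the hemisphere. This is only the smooth baseline, not the paper's detailed local solution; function names and the integration resolution are our own choices.

```python
import math

def ggx_ndf(cos_theta_h, alpha):
    """Standard GGX / Trowbridge-Reitz normal distribution function D(h).

    cos_theta_h: cosine between microfacet normal h and the macro-surface normal.
    alpha: roughness parameter.
    """
    if cos_theta_h <= 0.0:
        return 0.0
    c2 = cos_theta_h * cos_theta_h
    denom = c2 * (alpha * alpha - 1.0) + 1.0
    return alpha * alpha / (math.pi * denom * denom)


def projected_area(alpha, steps=100000):
    """Midpoint-rule check that the integral of D(h) cos(theta_h) over the hemisphere is 1."""
    dtheta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * dtheta
        total += ggx_ndf(math.cos(theta), alpha) * math.cos(theta) * math.sin(theta)
    return 2.0 * math.pi * total * dtheta  # azimuthal integral contributes 2*pi
```

At normal incidence the NDF peaks at $1/(\pi\alpha^2)$, and `projected_area(alpha)` evaluates to 1 up to discretization error for any roughness.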

Learning Generative Models for Rendering Specular Microgeometry

Alexandr Kuznetsov, Miloš Hašan, Zexiang Xu, Ling-Qi Yan, Bruce Walter, Nima Khademi Kalantari, Steve Marschner, Ravi Ramamoorthi

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2019)

Rendering specular material appearance is a core problem of computer graphics. While smooth analytical material models are widely used, the high-frequency structure of real specular highlights requires considering discrete, finite microgeometry. Instead of explicit modeling and simulation of the surface microstructure (which was explored in previous work), we propose a novel direction: learning the high-frequency directional patterns from synthetic or measured examples, by training a generative adversarial network (GAN). A key challenge in applying GAN synthesis to spatially varying BRDFs is evaluating the reflectance for a single location and direction without the cost of evaluating the whole hemisphere. We resolve this using a novel method for partial evaluation of the generator network. We are also able to control large-scale spatial texture using a conditional GAN approach. The benefits of our approach include the ability to synthesize spatially large results without repetition, support for learning from measured data, and evaluation performance independent of the complexity of the dataset synthesis or measurement.

Paper Video

GradNet: Unsupervised Deep Screened Poisson Reconstruction for Gradient-Domain Rendering

Jie Guo, Mengtian Li, Quewei Li, Yuting Qiang, Bingyang Hu, Yanwen Guo, Ling-Qi Yan

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2019)

Monte Carlo (MC) methods for light transport simulation are flexible and general but typically suffer from high variance and slow convergence. Gradient-domain rendering alleviates this problem by additionally generating image gradients and reformulating rendering as a screened Poisson image reconstruction problem. To improve the quality and performance of the reconstruction, we propose a novel and practical deep-learning-based approach in this paper. The core of our approach is a multi-branch auto-encoder, termed GradNet, which learns end-to-end a mapping from a noisy input image and its corresponding image gradients to a high-quality image with low variance. Once trained, our network is fast to evaluate and does not require manual parameter tweaking. Due to the difficulty of preparing ground truth images for training, we design and train our network in a completely unsupervised manner by learning directly from the input data. This is the first solution incorporating unsupervised deep learning into the gradient-domain rendering framework. The loss function is defined as an energy function including a data fidelity term and a gradient fidelity term. To further reduce the noise of the reconstructed image, the loss function is reinforced by adding a regularizer constructed from selected rendering-specific features. We demonstrate that our method improves the reconstruction quality for a diverse set of scenes, and reconstructing a high-resolution image takes far less than one second on a recent GPU.

Paper Supplementary
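The classical screened Poisson reconstruction that GradNet learns to replace combines a data fidelity term and a gradient fidelity term, the same two terms that appear in its loss. A minimal 1D version, assuming an L2 energy with a hypothetical weight `alpha` and solved with plain Gauss-Seidel sweeps on the normal equations, looks like this:

```python
def screened_poisson_1d(primal, grads, alpha=0.2, iters=500):
    """Reconstruct a 1D signal x that minimizes
        alpha * sum_i (x_i - primal_i)^2 + sum_i (x_{i+1} - x_i - grads_i)^2.

    primal: noisy pixel values (length n).
    grads: estimated forward differences x_{i+1} - x_i (length n - 1).
    """
    n = len(primal)
    x = list(primal)  # start from the noisy primal image
    for _ in range(iters):
        for i in range(n):
            rhs = alpha * primal[i]
            diag = alpha
            if i > 0:      # contribution of (x_i - x_{i-1} - g_{i-1})^2
                rhs += x[i - 1] + grads[i - 1]
                diag += 1.0
            if i < n - 1:  # contribution of (x_{i+1} - x_i - g_i)^2
                rhs += x[i + 1] - grads[i]
                diag += 1.0
            x[i] = rhs / diag  # Gauss-Seidel update using the newest neighbors
    return x
```

With accurate gradients and a small `alpha`, high-frequency noise in the primal image is strongly suppressed because the gradient term dominates; a learned approach avoids hand-tuning `alpha` and can exploit extra rendering features.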

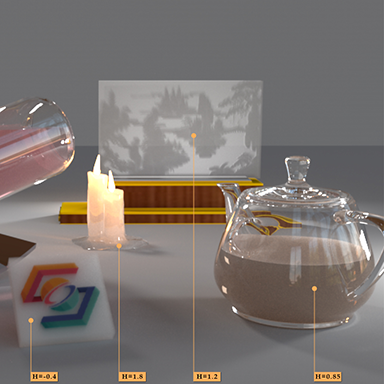

Adaptive BRDF-Oriented Multiple Importance Sampling of Many Lights

Yifan Liu, Kun Xu, Ling-Qi Yan

Proceedings of the Eurographics Symposium on Rendering, 2019

Many-light rendering is becoming more common and important as rendering reaches the next level of complexity. However, state-of-the-art algorithms for computing illumination under many lights are still far from efficient, because they consider light sampling and BRDF sampling separately. To deal with this inefficiency, we present a novel light sampling method named BRDF-oriented light sampling, which selects lights based on importance values estimated using the BRDF's contributions. Our BRDF-oriented light sampling method works naturally with MIS, and allows us to dynamically determine the number of samples allocated to different sampling techniques. With our method, we achieve significantly faster convergence to the ground truth, both perceptually and numerically, compared to previous many-light rendering algorithms.

Paper Code
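Because the method combines light and BRDF sampling through MIS with per-technique sample counts, a small reminder of how the standard (Veach-style) balance heuristic uses those counts may help. This toy integrates a 1D function on $[0, 1]$ with two stand-in strategies, a uniform pdf and a linear pdf $2x$; it does not reproduce the paper's BRDF-oriented light selection or its dynamic allocation, and all names are hypothetical.

```python
import math
import random

def mis_estimate(f, n_uniform, n_linear, seed=0):
    """Multi-sample MIS estimate of the integral of f over [0, 1].

    Strategy 1: uniform pdf p1(x) = 1.  Strategy 2: linear pdf p2(x) = 2x.
    With the balance heuristic w_i = n_i p_i / sum_k n_k p_k, every sample,
    whichever strategy drew it, contributes f(x) / (n1 * p1(x) + n2 * p2(x)).
    Requires n_uniform > 0 so the denominator never vanishes at x = 0.
    """
    rng = random.Random(seed)
    xs = [rng.random() for _ in range(n_uniform)]
    xs += [math.sqrt(rng.random()) for _ in range(n_linear)]  # inverse CDF of p2
    return sum(f(x) / (n_uniform * 1.0 + n_linear * 2.0 * x) for x in xs)
```

`mis_estimate(lambda x: x * x, 10000, 10000)` lands close to the exact value 1/3. Changing the two counts changes the weights but keeps the estimator unbiased, which is what makes adaptive sample allocation between techniques possible.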

Fractional Gaussian Fields for Modeling and Rendering of Spatially-Correlated Media

Jie Guo, Yanjun Chen, Bingyang Hu, Ling-Qi Yan, Yanwen Guo, Yuntao Liu

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2019)

Transmission of radiation through spatially-correlated media has been shown to deviate from the classical exponential law that holds for the corresponding uncorrelated media. In this paper, we propose a general, physically-based framework for modeling and rendering such correlated media with non-exponential decay of transmittance. We describe spatial correlations by introducing the Fractional Gaussian Field (FGF), a powerful mathematical tool that has proven useful in many areas but remains under-explored in graphics. With the FGF, we study the effects of correlations in a unified manner, by modeling both high-frequency, noise-like fluctuations and k-th order fractional Brownian motion (fBm) with a stochastic continuity property. As a result, we are able to reproduce a wide variety of appearances stemming from different types of spatial correlations. Compared to previous work, our method is the first that addresses both short-range and long-range correlations using physically-based fluctuation models. We show that our method can simulate different extents of randomness in spatially-correlated media, resulting in a smooth transition in a range of appearances from exponential falloff to complete transparency. We further demonstrate how our method can be integrated into an energy-conserving RTE framework with a well-designed importance sampling scheme, and validate it against classical transport theory and previous work.

Paper BibTeX Video Supplementary
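Why does randomness in a medium break the exponential law? A generic illustration, which is not the FGF model: if each path sees a random extinction coefficient $\sigma$, the average transmittance $E[e^{-\sigma d}]$ is at least $e^{-E[\sigma] d}$ by Jensen's inequality, so the curve falls off more slowly than Beer-Lambert. The gamma distribution below is an assumption chosen only because its Laplace transform has the closed form $(1 + \theta d)^{-k}$, a power law rather than an exponential.

```python
import math
import random

def transmittance_exponential(d, mean_sigma):
    """Classical Beer-Lambert transmittance for a fixed extinction coefficient."""
    return math.exp(-mean_sigma * d)


def transmittance_gamma_closed_form(d, k, theta):
    """E[exp(-sigma * d)] for sigma ~ Gamma(shape=k, scale=theta): (1 + theta*d)^-k."""
    return (1.0 + theta * d) ** (-k)


def transmittance_gamma_mc(d, k, theta, n=20000, seed=0):
    """Monte Carlo check: average Beer-Lambert over random per-path extinction."""
    rng = random.Random(seed)
    # random.gammavariate(alpha, beta) uses shape alpha and scale beta.
    return sum(math.exp(-rng.gammavariate(k, theta) * d) for _ in range(n)) / n
```

With shape 2 and scale 0.5 (mean extinction 1), the averaged transmittance at distance 2 is 0.25, noticeably above the exponential value $e^{-2} \approx 0.135$.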

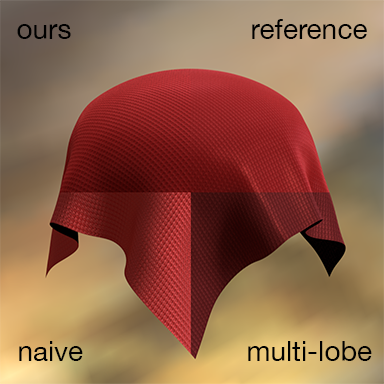

Accurate Appearance Preserving Prefiltering for Rendering Displacement-Mapped Surfaces

Lifan Wu, Shuang Zhao, Ling-Qi Yan, Ravi Ramamoorthi

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2019)

Prefiltering the reflectance of a displacement-mapped surface while preserving its overall appearance is challenging, as smoothing a displacement map causes complex changes of illumination effects such as shadowing-masking and interreflection. In this paper, we introduce a new method that prefilters displacement maps and BRDFs jointly and constructs SVBRDFs at reduced resolutions. These SVBRDFs preserve the appearance of the input models by capturing both shadowing-masking and interreflection effects. To express our appearance-preserving SVBRDFs efficiently, we leverage a new representation that involves spatially varying NDFs and a novel scaling function that accurately captures micro-scale changes of shadowing, masking, and interreflection effects. Further, we show that the 6D scaling function can be factorized into a 2D function of surface location and a 4D function of direction. By exploiting the smoothness of these functions, we develop a simple and efficient factorization method that does not require computing the full scaling function. The resulting functions can be represented at low resolutions (e.g., $4^2$ for the spatial function and $15^4$ for the angular function), leading to minimal additional storage. Our method generalizes well to different types of geometries beyond Gaussian surfaces. Models prefiltered using our approach at different scales can be combined to form mipmaps, allowing accurate and anti-aliased level-of-detail (LoD) rendering.

Paper BibTeX Video Slides Code & Data
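To make the storage claim concrete, here is the arithmetic at the example resolutions quoted above. It assumes, for illustration only, a single product of one spatial table and one angular table with one scalar per entry; the symbols $S$, $S_{\mathbf{x}}$, $S_{\omega}$, $\omega_i$ and $\omega_o$ are hypothetical notation, not taken from the paper.

$$
S(\mathbf{x}, \omega_i, \omega_o) \approx S_{\mathbf{x}}(\mathbf{x})\, S_{\omega}(\omega_i, \omega_o)
$$

$$
\underbrace{4^2}_{S_{\mathbf{x}}} + \underbrace{15^4}_{S_{\omega}} = 16 + 50\,625 = 50\,641
\qquad \text{vs.} \qquad
4^2 \cdot 15^4 = 810\,000 \ \text{(full 6D table)}
$$

Under these assumptions, the factored form needs roughly 16 times fewer entries than tabulating the 6D function directly at the same resolutions.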

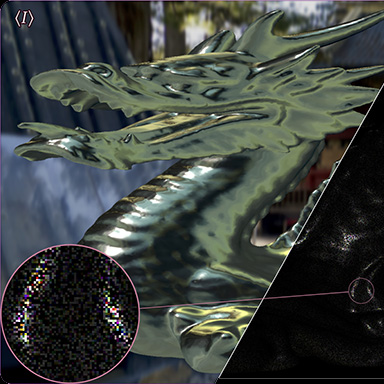

Rendering Specular Microgeometry with Wave Optics

Ling-Qi Yan, Miloš Hašan, Bruce Walter, Steve Marschner, Ravi Ramamoorthi

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2018)

Simulation of light reflection from specular surfaces is a core problem of computer graphics. Most existing solutions either make the approximation of providing only a large-area average solution in terms of a fixed BRDF (ignoring spatial detail), or are based only on geometric optics (which is an approximation to more accurate wave optics), or both. We design the first rendering algorithm based on a wave optics model that is also able to compute spatially-varying specular highlights with high-resolution detail. We compute a wave optics reflection integral over the coherence area; our solution is based on approximating the phase-delay grating representation of a micron-resolution surface heightfield using Gabor kernels. Our results show both single-wavelength and spectral solutions to reflection from common everyday objects, such as brushed, scratched and bumpy metals.

Paper BibTeX Video Supplementary Code

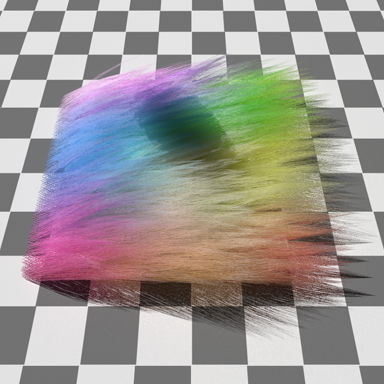

A BSSRDF Model for Efficient Rendering of Fur with Global Illumination

Ling-Qi Yan, Weilun Sun, Henrik Wann Jensen, Ravi Ramamoorthi

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2017)

Physically-based hair and fur rendering is crucial for visual realism. One of the key effects is global illumination, involving light bouncing between different fibers. This is very time-consuming to simulate with methods like path tracing. Efficient approximate global illumination techniques such as dual scattering are in widespread use, but are limited to human hair only, and cannot handle color bleeding, transparency and hair-object inter-reflection.

We present the first global illumination model, based on dipole diffusion for subsurface scattering, to approximate light bouncing between individual fur fibers. We model complex light and fur interactions as subsurface scattering, and use a simple neural network to convert from fur fibers' properties to scattering parameters. Our network is trained on only a single scene with different parameters, but applies to general scenes and produces visually accurate appearance, supporting color bleeding and further inter-reflections.
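As background for the dipole diffusion model mentioned above, here is the classical dipole diffuse reflectance profile of Jensen et al. [2001] for a semi-infinite homogeneous medium. It is not the fur-specific model; in particular, the neural network that maps fur fiber properties to scattering parameters is not shown, and the coefficient values used below are arbitrary assumptions.

```python
import math

def fresnel_diffuse_reflectance(eta):
    """Polynomial fit for the diffuse Fresnel reflectance F_dr (relative index eta)."""
    return -1.440 / eta ** 2 + 0.710 / eta + 0.668 + 0.0636 * eta


def dipole_rd(r, sigma_a, sigma_s_prime, eta=1.3):
    """Classical dipole diffuse reflectance R_d(r).

    r: distance between the light entry and exit points on the surface.
    sigma_a, sigma_s_prime: absorption and reduced scattering coefficients.
    """
    sigma_t_prime = sigma_a + sigma_s_prime
    alpha_prime = sigma_s_prime / sigma_t_prime          # reduced albedo
    sigma_tr = math.sqrt(3.0 * sigma_a * sigma_t_prime)  # effective transport coefficient
    f_dr = fresnel_diffuse_reflectance(eta)
    a = (1.0 + f_dr) / (1.0 - f_dr)                      # boundary mismatch term
    z_r = 1.0 / sigma_t_prime                            # depth of the real source
    z_v = z_r * (1.0 + 4.0 * a / 3.0)                    # height of the virtual source
    d_r = math.sqrt(r * r + z_r * z_r)
    d_v = math.sqrt(r * r + z_v * z_v)
    term_r = z_r * (sigma_tr * d_r + 1.0) * math.exp(-sigma_tr * d_r) / d_r ** 3
    term_v = z_v * (sigma_tr * d_v + 1.0) * math.exp(-sigma_tr * d_v) / d_v ** 3
    return alpha_prime / (4.0 * math.pi) * (term_r + term_v)
```

The profile is positive and falls off monotonically with `r`. Treating a fur volume as a scattering medium means that a handful of such parameters can summarize many inter-fiber bounces instead of tracing each one.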

Multiple Axis-Aligned Filters for Rendering of Combined Distribution Effects

Lifan Wu, Ling-Qi Yan, Alexandr Kuznetsov, Ravi Ramamoorthi

Proceedings of the Eurographics Symposium on Rendering, 2017

Distribution effects, such as diffuse global illumination, soft shadows and depth of field, are most accurately rendered using Monte Carlo ray or path tracing. However, physically accurate algorithms can take hours to converge to a noise-free image. A recent body of work has begun to bridge this gap, showing that both individual and multiple effects can be achieved accurately and efficiently. These methods use sparse sampling, GPU ray tracers, and adaptive filtering for reconstruction. They are based on a Fourier analysis, which models distribution effects as a wedge in the frequency domain. The wedge can be approximated either as a single large axis-aligned filter, which is fast but retains a large area outside the wedge and therefore requires a higher sampling rate, or as a tighter sheared filter, which is slow to compute. The state-of-the-art fast sheared filtering method combines a low sampling rate with efficient filtering, but has been demonstrated for individual distribution effects only, and is limited by high-dimensional data storage and processing.

We present a novel filter for efficient rendering of combined effects, involving soft shadows and depth of field, with global (diffuse indirect) illumination. We approximate the wedge spectrum with multiple axis-aligned filters, marrying the speed of axis-aligned filtering with an even more accurate (compact and tighter) representation than sheared filtering. We demonstrate rendering of single effects at comparable sampling and frame-rates to fast sheared filtering. Our main practical contribution is in rendering multiple distribution effects, which have not even been demonstrated accurately with sheared filtering. For this case, we present an average speedup of 6× compared with previous axis-aligned filtering methods.

An Efficient and Practical Near and Far Field Fur Reflectance Model

Ling-Qi Yan, Henrik Wann Jensen, Ravi Ramamoorthi

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2017)

Physically-based fur rendering is difficult. Recently, structural differences between hair and fur fibers have been revealed by Yan et al. [2015], who showed that fur fibers have an inner scattering medulla, and developed a double cylinder model. However, fur rendering is still complicated due to the complex scattering paths through the medulla. We develop a number of optimizations that improve efficiency and generality without compromising accuracy, leading to a practical fur reflectance model. We also propose a key contribution to support both near and far-field rendering, and allow smooth transitions between them.

Specifically, we derive a compact BCSDF model for fur reflectance with only 5 lobes. Our model unifies hair and fur rendering, making it easy to implement within standard hair rendering software, since we keep the traditional R, TT, and TRT lobes of hair and only add two scattered lobes as extensions, TT^s and TRT^s. Moreover, we introduce a compression scheme using tensor decomposition that dramatically reduces the precomputed data storage for scattered lobes to only 150 KB, with minimal loss of accuracy. By exploiting piecewise analytic integration, our method further enables a multi-scale rendering scheme that transitions between near- and far-field rendering smoothly and efficiently for the first time, leading to a 6-8x speedup over previous work.

Antialiasing Complex Global Illumination Effects in Path-space

Laurent Belcour, Ling-Qi Yan, Ravi Ramamoorthi, Derek Nowrouzezahrai

ACM Transactions on Graphics, 2016

We present the first method to efficiently and accurately predict antialiasing footprints to pre-filter color-, normal-, and displacement-mapped appearance in the context of multi-bounce global illumination. We derive Fourier spectra for radiance and importance functions that allow us to compute spatial-angular filtering footprints at path vertices, for both uni- and bi-directional path construction. We then use these footprints to antialias reflectance modulated by high-resolution color, normal, and displacement maps encountered along a path. In doing so, we also unify the traditional path-space formulation of light transport with our frequency-space interpretation of global illumination pre-filtering. Our method is fully compatible with all existing single-bounce pre-filtering appearance models, not restricted by path length, and easy to implement atop existing path-space renderers. We illustrate its effectiveness on several radiometrically complex scenarios where previous approaches either completely fail or require orders of magnitude more time to arrive at similarly high-quality results.

Paper BibTeX Video

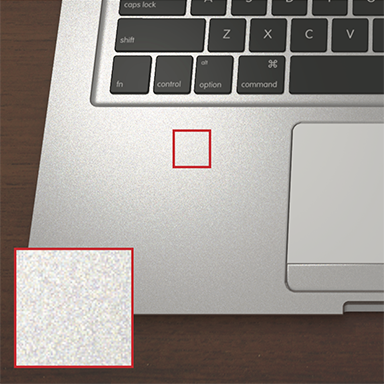

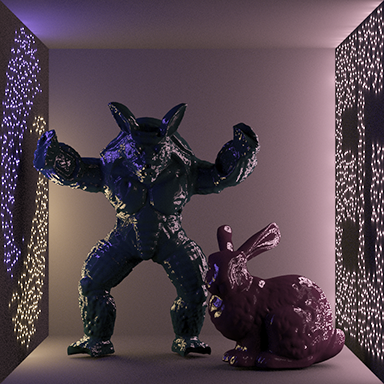

Position-Normal Distributions for Efficient Rendering of Specular Microstructure

Ling-Qi Yan, Miloš Hašan, Steve Marschner, Ravi Ramamoorthi

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2016)

Specular BRDF rendering traditionally approximates surface microstructure using a smooth normal distribution, but this ignores glinty effects, easily observable in the real world. While modeling the actual surface microstructure is possible, the resulting rendering problem is prohibitively expensive. Recently, Yan et al. [2014] and Jakob et al. [2014] made progress on this problem, but their approaches are still expensive and lack full generality in their material and illumination support. We introduce an efficient and general method that can be easily integrated in a standard rendering system. We treat a specular surface as a four-dimensional position-normal distribution, and fit this distribution using millions of 4D Gaussians, which we call elements. This leads to closed-form solutions to the required BRDF evaluation and sampling queries, enabling the first practical solution to rendering specular microstructure.

Paper BibTeX Video Code

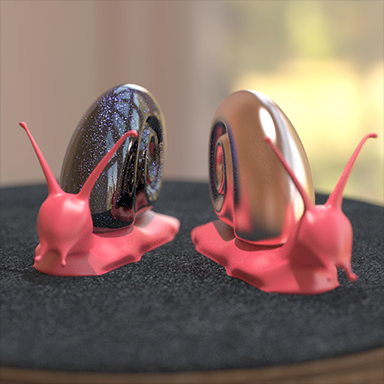

Physically-Accurate Fur Reflectance: Modeling, Measurement and Rendering

Ling-Qi Yan, Chi-Wei Tseng, Henrik Wann Jensen, Ravi Ramamoorthi

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2015)

Rendering photo-realistic animal fur is a long-standing problem in computer graphics. Considerable effort has gone into modeling the geometric complexity of fur, but the reflectance of fur fibers is not well understood. Fur has a distinct diffusive and saturated appearance, which is not captured by either the Marschner hair model or the Kajiya-Kay model. In this paper, we develop a physically-accurate reflectance model for fur fibers. Based on anatomical literature and measurements, we develop a double cylinder model for the reflectance of a single fur fiber, where an outer cylinder represents the biological observation of a cortex covered by multiple cuticle layers, and an inner cylinder represents the scattering interior structure known as the medulla. Our key contribution is to model medulla scattering accurately; in contrast, for human hair, the medulla has minimal width and thus contributes negligibly to the reflectance. Medulla scattering introduces additional reflection and transmission paths, as well as diffusive reflectance lobes. We validate our physical model with measurements on real fur fibers, and introduce the first database in computer graphics of reflectance profiles for nine fur samples. We show that our model achieves significantly better fits to the measured data than the Marschner hair reflectance model. For efficient rendering, we develop a method to precompute 2D medulla scattering profiles and analytically approximate our reflectance model with factored lobes. The accuracy of the approach is validated by comparing our rendering model to full 3D light transport simulations. Our model provides an enriched set of controls, where the parameters we fit can be directly used to render realistic fur, or serve as a starting point from which artists can manually tune parameters for desired appearances.

Paper BibTeX Video Database

Fast 4D Sheared Filtering for Interactive Rendering of Distribution Effects

Ling-Qi Yan, Soham Uday Mehta, Ravi Ramamoorthi, Fredo Durand

ACM Transactions on Graphics, 2015

Soft shadows, depth of field, and diffuse global illumination are common distribution effects, usually rendered by Monte Carlo ray tracing. Physically correct, noise-free images can require hundreds or thousands of ray samples per pixel, and take a long time to compute. Recent approaches have exploited sparse sampling and filtering; the filtering is either fast (axis-aligned), but requires more input samples, or needs fewer input samples but is very slow (sheared). We present a new approach for fast sheared filtering on the GPU. Our algorithm factors the 4D sheared filter into four 1D filters. We derive complexity bounds for our method, showing that the per-pixel complexity is reduced from $O(n^2 l^2)$ to $O(nl)$, where $n$ is the linear filter width (filter size is $O(n^2)$) and $l$ is the (usually very small) number of samples for each dimension of the light or lens per pixel (spp is $l^2$). We thus reduce sheared filtering overhead dramatically. We demonstrate rendering of depth of field, soft shadows and diffuse global illumination at interactive speeds. We reduce the number of samples needed by 5-8x, compared to axis-aligned filtering, and framerates are 4x faster for equal quality.

Paper BibTeX Video Code Snippet
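The key step above is factoring a multi-dimensional filter into a sequence of 1D filters. The actual 4D sheared filter is not reproduced here; as a simpler illustration of the same principle, a separable 2D Gaussian applied directly gives the same result as two 1D passes, which cuts the per-pixel cost for a width-$n$ kernel from $O(n^2)$ to $O(n)$. The Gaussian kernel and clamped borders are assumptions made for the sketch.

```python
import math

def gaussian_kernel_1d(radius, sigma):
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    w = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    s = sum(w)
    return [v / s for v in w]


def filter_2d_direct(img, k):
    """Full 2D convolution with kernel k[dy] * k[dx]: O(n^2) work per pixel."""
    h, w, r = len(img), len(img[0]), len(k) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-r, r + 1):
                for dx in range(-r, r + 1):
                    yy = min(max(y + dy, 0), h - 1)  # clamp at image borders
                    xx = min(max(x + dx, 0), w - 1)
                    acc += k[dy + r] * k[dx + r] * img[yy][xx]
            out[y][x] = acc
    return out


def filter_2d_separable(img, k):
    """Same result via a horizontal then a vertical 1D pass: O(n) work per pass."""
    h, w, r = len(img), len(img[0]), len(k) // 2
    tmp = [[sum(k[d + r] * img[y][min(max(x + d, 0), w - 1)] for d in range(-r, r + 1))
            for x in range(w)] for y in range(h)]
    return [[sum(k[d + r] * tmp[min(max(y + d, 0), h - 1)][x] for d in range(-r, r + 1))
             for x in range(w)] for y in range(h)]
```

Both functions agree to floating-point precision. A sheared filter is not axis-separable in this simple way, which is why deriving a factorization for it is a contribution in its own right.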

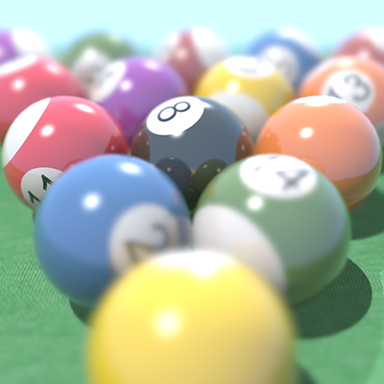

Rendering Glints on High-Resolution Normal-Mapped Specular Surfaces

Ling-Qi Yan*, Miloš Hašan*, Wenzel Jakob, Jason Lawrence, Steve Marschner, Ravi Ramamoorthi

(*: dual first authors)

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2014)

Rendering a complex specular surface under sharp point lighting is far from easy. Using Monte Carlo point sampling for this purpose is impractical: the energy is concentrated in tiny highlights that take up a minuscule fraction of the pixel. We instead compute the accurate solution that Monte Carlo would eventually converge to, using a completely different deterministic approach with minimal approximations. Our method considers the true distribution of normals on a surface patch seen through a single pixel, which can be highly complicated. This requires computing the probability density of the given normal coming from anywhere on the patch. We show how to evaluate this efficiently, assuming a Gaussian surface patch and Gaussian intrinsic roughness. We also take advantage of hierarchical pruning of position-normal space to quickly find texels that might contribute to a given normal distribution evaluation. Our results show complicated, temporally varying glints from materials such as bumpy plastics, brushed and scratched metals, metallic paint and ocean waves.

Paper BibTeX Video Supplementary Code

Discrete Stochastic Microfacet Models

Wenzel Jakob, Miloš Hašan, Ling-Qi Yan, Jason Lawrence, Ravi Ramamoorthi, Steve Marschner

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2014)

This paper investigates rendering glittery surfaces, ones which exhibit shifting random patterns of glints as the surface or viewer moves. It applies both to dramatically glittery surfaces that contain mirror-like flakes and also to rough surfaces that exhibit more subtle small scale glitter, without which most glossy surfaces appear too smooth in close-up. These phenomena can in principle be simulated by high-resolution normal maps, but maps with tiny features create severe aliasing problems under narrow-angle illumination. In this paper we present a stochastic model for the effects of random subpixel structures that generates glitter and spatial noise that behave correctly under different illumination conditions and viewing distances, while also being temporally coherent so that they look right in motion. The model is based on microfacet theory, but it replaces the usual continuous microfacet distribution with a discrete distribution of scattering particles on the surface. A novel stochastic hierarchy allows efficient evaluation in the presence of large numbers of random particles, without ever having to consider the particles individually. This leads to a multiscale procedural BRDF that is readily implemented in standard rendering systems, and which converges back to the smooth case in the limit.

Paper BibTeX Video

Accurate Translucent Material Rendering under Spherical Gaussian Lights

Ling-Qi Yan, Yahan Zhou, Kun Xu, Rui Wang

Computer Graphics Forum (Proceedings of Pacific Graphics 2012)

In this paper, we present a new algorithm for accurate rendering of translucent materials under Spherical Gaussian (SG) lights. Our algorithm builds upon the quantized-diffusion BSSRDF model recently introduced in [dI11]. Our main contribution is an efficient algorithm for computing the integral of the BSSRDF with an SG light. We incorporate both single and multiple scattering components. Our model improves upon previous work by accounting for the incident angle of each individual SG light. This leads to more accurate rendering results, notably elliptical profiles from oblique illumination. In contrast, most existing models only consider the total irradiance received from all lights, and hence can only generate circular profiles. Experimental results show that our method is suitable for rendering of translucent materials under finite-area lights or environment lights that can be approximated by a small number of SGs.
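For readers unfamiliar with SG lights, the sketch below shows the common SG definition $G(\mathbf{v}) = \mu\, e^{\lambda(\mathbf{v} \cdot \mathbf{p} - 1)}$ and its closed-form integral over the sphere, $2\pi\mu(1 - e^{-2\lambda})/\lambda$, checked numerically. Closed forms like this are what make SGs convenient light representations; the paper's BSSRDF-SG integral itself is not reproduced, and the function and parameter names are our own.

```python
import math

def sg_eval(v, axis, sharpness, amplitude):
    """Spherical Gaussian G(v) = amplitude * exp(sharpness * (dot(v, axis) - 1)).

    v and axis are unit 3D vectors.
    """
    cos_angle = sum(a * b for a, b in zip(v, axis))
    return amplitude * math.exp(sharpness * (cos_angle - 1.0))


def sg_integral(sharpness, amplitude):
    """Closed-form integral of an SG over the unit sphere."""
    return 2.0 * math.pi * amplitude / sharpness * (1.0 - math.exp(-2.0 * sharpness))


def sg_integral_numeric(sharpness, amplitude, steps=100000):
    """Midpoint-rule check; by symmetry only the cosine t to the axis matters."""
    axis = (0.0, 0.0, 1.0)
    dt = 2.0 / steps
    total = 0.0
    for i in range(steps):
        t = -1.0 + (i + 0.5) * dt              # t = cos(angle to the axis)
        s = math.sqrt(max(0.0, 1.0 - t * t))
        total += sg_eval((s, 0.0, t), axis, sharpness, amplitude)
    return 2.0 * math.pi * total * dt          # d(omega) = d(phi) dt; phi spans 2*pi
```

For sharpness 10 and amplitude 2, both integrals give about 1.2566.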

Paper BibTeX Slides

Misc

I am a huge fan of video games. In fact, they are the reason I made up my mind, back in primary school, to pursue rendering and Computer Graphics as my lifelong career. We had a Hearthstone team at UC Berkeley, and we made it to the playoffs in the TeSPA Hearthstone Collegiate National Championship. I used to play PUBG, but recently our team (FYI, SoEasy) has shifted its interest to Apex Legends, and we hit Platinum in the second season. Here is a screenshot of a chicken dinner we had recently.

I play piano a little, but classical only (with a National Piano Certificate of Level 10 in China, the highest level for non-professional amateurs). I'm especially fond of Chopin. Here are some short recordings of my home performances (F. Chopin: Waltz Op. 64 No. 2 in C-Sharp Minor, Nocturne Op. 9 No. 1 in B-Flat Minor and Nocturne Op. 9 No. 2 in E-Flat Major). I'll upload more as I continue to update my website.

My legal name is spelled Lingqi Yan, and I use Ling-Qi Yan only for publications (due to some lab traditions at Tsinghua University). My name is pronounced Ling-Chi-Yen, and here are some funny mistakes in how people call me (and I like them all).

"Lingqi Yan, first of his name, the unrejected, author of seven papers, breaker of the record, and the chicken eater." -- Born to be Legendary, by Lifan Wu @ UCSD.